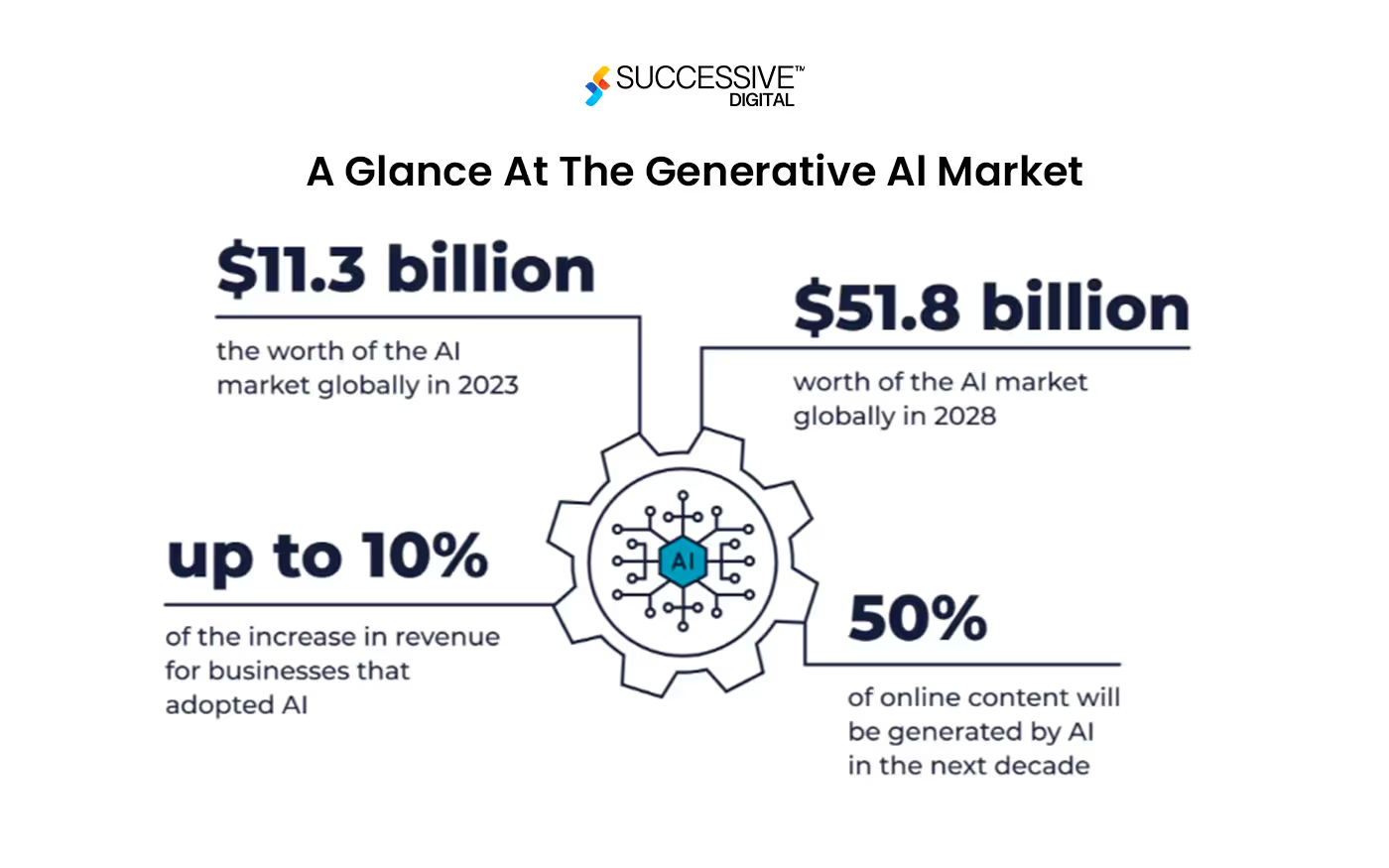

Integrating generative AI is a standout opportunity for companies trying to stay ahead of the business curve, because it can reshape industries and enable novel solutions. It can be used to develop advanced products, create captivating marketing campaigns, and optimize intricate workflows, all of which have the potential to change the way we live, work, and run business initiatives. As the name implies, generative AI can generate a vast array of content, including text, images, music, code, video, and audio. Although the idea is not new, generative AI has reached new heights with recent developments in machine learning methods, especially transformers. For many organizations, adopting this technology is becoming less a nice-to-have and more a requirement for long-term competitiveness. However, the value of generative AI varies from business to business. Because models can be trained or adapted for specific business use cases, the technology is promising across many industries, and you may need a generative AI consulting company to integrate it into your business effectively. This blog explains how to create a custom gen AI solution for your specific requirements.

What is Generative AI?

Generative AI is an advanced branch of machine learning focused on enabling computers to create new content autonomously, including text, images, videos, and audio. By training on specific datasets, these models learn the underlying patterns and use that knowledge to produce original outputs. The roots of generative AI go back to the 1950s, when early work on basic neural networks and rule-based systems aimed to emulate human thought and decision-making. Over time, the technology advanced and deep neural networks were introduced. Early probabilistic approaches, such as Bayesian networks and Markov models, were instrumental in computer vision and robotics. However, generative AI's popularity took off in 2014 with the introduction of Generative Adversarial Networks (GANs) and accelerated in 2017 with the transformer architecture.

Modern generative AI tools, such as ChatGPT, Midjourney, DALL-E, and Bard, rely on neural network architectures, including diffusion models, GANs, transformers, and variational autoencoders, to generate human-like content. Each takes its own approach: diffusion models learn to reverse a gradual noising process, turning random noise into realistic data step by step; GANs pit two networks against each other to refine outputs; transformers use attention mechanisms to power large language models; and variational autoencoders generate new content by sampling from a learned probabilistic representation of the training data.
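To make the diffusion idea more concrete, here is a minimal numpy sketch of the forward (noising) half of a DDPM-style diffusion model. It assumes a linear noise schedule from 1e-4 to 0.02 over 1,000 steps, a common choice but not one the tools above necessarily use. A trained network would learn to run this process in reverse; that learned denoiser is not shown.

```python
import numpy as np

def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """Variance of the noise added at each step (assumed linear schedule)."""
    return np.linspace(beta_start, beta_end, num_steps)

def forward_diffuse(x0: np.ndarray, t: int, betas: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Sample x_t ~ q(x_t | x_0) in closed form:
    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    alpha_bar_t = np.cumprod(1.0 - betas)[t]
    noise = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * noise

rng = np.random.default_rng(0)
betas = linear_beta_schedule(1000)
alpha_bars = np.cumprod(1.0 - betas)   # fraction of the original signal kept at each step
x0 = np.ones(4)                        # a toy "clean" data point
x_early = forward_diffuse(x0, t=0, betas=betas, rng=rng)    # almost unchanged
x_late = forward_diffuse(x0, t=999, betas=betas, rng=rng)   # almost pure noise
```

Because `alpha_bars` shrinks toward zero, the last step is essentially Gaussian noise, which is why generation can start from random noise and denoise backward.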

Why Should You Invest in Developing a Custom Gen AI Solution?

Each business has its own goals and challenges, so AI solutions must be customized to meet particular demands. Developing a bespoke generative AI solution can give your company a competitive edge by providing a highly customized toolkit for issues unique to your business. Here is why a purpose-built AI solution can be the right choice for your company:

- High-Quality Predictions

Tailored AI solutions are designed to process specific datasets and deliver precise insights that match your business use case. Unlike off-the-shelf options, a custom gen AI solution is built to understand and interpret your particular data, which makes its forecasts and suggestions more precise and relevant. This specialization leads to better decision-making and operational efficiency tailored to your unique requirements.

- Competitive Advantage

Developing a custom gen AI solution can give your company a competitive edge. Consider how proprietary AI systems, such as Netflix's recommendation engine or Uber's driverless-car research, helped distinguish these businesses in their markets. Similarly, a well-designed AI solution can set your company apart and establish it as an innovator. And if your solution benefits the wider industry, you may be able to license it to other businesses as an additional revenue stream.

- Long-Term Gains

Custom gen AI solutions provide long-term benefits, whereas pre-built tools often serve only as temporary fixes. An AI solution designed specifically for your company can be modified and improved as your business grows, so it keeps pace with new opportunities and problems. Building a tailored solution also grows internal expertise, giving your team the skills to take on future AI initiatives with confidence.

- Built for Purpose

The predefined settings of off-the-shelf AI tools often fail to meet a particular company's needs. These tools are built to handle a broad range of tasks efficiently, but they frequently fall short on specific requirements. A custom gen AI solution, on the other hand, can be adjusted to the complexities of your business operations and designed around your targets, delivering better performance and closer alignment with your objectives.

Generative AI Integrations for Custom AI Solutions

Businesses have used AI and ML for years, but generative AI has significantly expanded what these systems can do. With recent advancements, organizations can build generative AI solutions adapted specifically to the needs of their sector. Let's look at some possible generative AI integrations for creating custom AI solutions:

- Predictive Analytics for Strategic Decision-Making

Predictive analytics leverages historical data to forecast future trends, enabling businesses to craft proactive and adaptable strategies. For example, Amazon uses generative AI algorithms to predict customer demand, optimize inventory management, and reduce costs. By integrating Generative AI with existing ML models, Amazon accurately forecasts stock levels and purchasing behaviors, resulting in a highly efficient supply chain that aligns closely with consumer demands.

- Enhancing Customer Experience (CX) through Personalization

Businesses can now deliver personalized experiences at scale by integrating Generative AI into their CX strategies. For example, Starbucks utilizes Generative AI to analyze customer data collected via its mobile app. This analysis enables the company to offer tailored product recommendations and promotions, significantly boosting customer engagement and satisfaction. By processing vast amounts of consumer preferences and purchase histories, Generative AI models can continually refine these recommendations, ensuring they remain relevant and compelling.

- Automating Complex Processes with AI

Generative AI transforms process automation by streamlining repetitive tasks and improving operational efficiency. Siemens, a global leader in industrial manufacturing, uses Generative AI to optimize production processes. The AI models predict maintenance needs, monitor equipment performance, and adjust production schedules autonomously. By automating these complex tasks, Siemens minimizes downtime and enhances overall production efficiency, demonstrating the potential of Generative AI in industrial settings.

- AI-Driven Market Research and Consumer Insights

Generative AI-powered platforms can analyze large datasets—such as market reports, customer feedback, and social media trends—to extract actionable insights. For example, Coca-Cola leverages Generative AI to monitor customer sentiment across various social media channels. This real-time analysis allows Coca-Cola to identify emerging trends, evaluate the impact of marketing campaigns, and adapt its strategies to align with shifting consumer demands and market conditions.

- Strengthening Cybersecurity with AI

As cyber threats become more advanced and frequent, traditional cybersecurity measures often fall short. Generative AI offers a proactive approach to threat detection and mitigation. IBM's Watson for Cyber Security is a prime example of how advanced AI can strengthen security protocols: Watson's AI models sift through vast amounts of unstructured data to detect anomalies that may signal a cyberattack. By incorporating generative AI into its cybersecurity framework, IBM provides clients with a more resilient defense, helping protect sensitive data and meet regulatory standards.

How does Generative AI work?

How well generative AI works depends on how comprehensively it has been trained on large, complex datasets. Its design is loosely inspired by the human brain: it learns patterns from examples and draws on them to produce new output. If you are thinking of developing a custom gen AI solution, you should understand how generative AI works. It is a continuously evolving space whose possibilities are still being uncovered. Let's look at how generative AI works:

- Data Ingestion and Preprocessing

Input data: Generative AI systems start with large, often unstructured datasets drawn from sources such as electronic health records (EHRs), financial transactions, or retail customer interactions.

Preprocessing: Data is cleaned, normalized, and structured to make it suitable for training. This step involves removing noise, handling missing values, and transforming data into formats that neural networks can process. In healthcare, for example, patient records would be anonymized and categorized to protect privacy while retaining useful patterns.
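The healthcare preprocessing example can be sketched with the Python standard library. This is a minimal illustration under assumptions: the record fields (`patient_id`, `name`, `phone`, `diagnosis`) and the secret key are hypothetical. Note that a keyed hash is strictly pseudonymization rather than full anonymization, because whoever holds the key can re-link tokens to IDs.

```python
import hashlib
import hmac

# Hypothetical secret, assumed to live outside the dataset (e.g. in a secrets manager).
SECRET_KEY = b"replace-with-a-securely-stored-key"

def pseudonymize_id(patient_id: str, key: bytes = SECRET_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).
    The same ID always maps to the same token, so records can still be
    joined, but the original ID cannot be read from the token."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()

def preprocess_record(record: dict) -> dict:
    """Drop direct identifiers, pseudonymize the ID, and normalize a text field."""
    cleaned = {k: v for k, v in record.items() if k not in {"name", "phone"}}
    cleaned["patient_id"] = pseudonymize_id(record["patient_id"])
    cleaned["diagnosis"] = record["diagnosis"].strip().lower()
    return cleaned

record = {"patient_id": "P-001", "name": "Jane Doe", "phone": "555-0100", "diagnosis": "  Type 2 Diabetes "}
out = preprocess_record(record)
```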

- Model Training and Neural Networks

Neural networks: Neural networks are the backbone of generative AI. Key architectures include Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), transformers, and diffusion models.

GANs: Used where high-quality, realistic output is required, such as generating synthetic medical images for diagnostic training or synthetic financial data for predictive modeling.

Transformers: Particularly important for natural language processing tasks, such as automating customer support in retail or analyzing financial documents. Transformers use the attention mechanism to weigh the most relevant parts of the input, which helps them produce accurate results even on large datasets.

VAEs and diffusion models: Applied where probabilistic outputs are needed, as in predictive analytics for risk assessment in finance or personalized treatment planning in healthcare.

Training process: Models are trained on vast datasets to identify patterns and correlations. In retail, for example, a model could be trained on customer behavior to predict purchasing trends and improve inventory management.
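The idea of "learning patterns from data" can be illustrated with a deliberately tiny model. The sketch below is a count-based bigram language model, not a neural network: it estimates P(next word | current word) from a hypothetical three-sentence corpus. Real generative models learn far richer patterns with gradient descent, but the principle of turning training data into a probability distribution over what comes next is the same.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus: list[str]) -> dict[str, dict[str, float]]:
    """Learn P(next_word | word) by counting adjacent word pairs."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark sentence boundaries so the model also learns how sentences start and end.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    # Normalize counts into conditional probabilities.
    return {
        prev: {nxt: c / sum(nexts.values()) for nxt, c in nexts.items()}
        for prev, nexts in counts.items()
    }

corpus = [  # hypothetical toy training data
    "customers buy coffee",
    "customers buy tea",
    "customers like coffee",
]
model = train_bigram_model(corpus)
```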

- Inference and Content Generation

Inference engine: After training, the model uses the learned patterns to generate new content or predictions. In healthcare, for instance, this could mean generating personalized treatment recommendations based on patient history.

Output layer: The final stage, where the generated content (a report, image, or prediction) is produced. This content must align with business objectives, such as improving customer engagement in retail or ensuring compliance with financial regulations.
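At inference time, language models typically output a score (logit) per candidate token and then sample from the resulting probability distribution. The sketch below shows softmax with a temperature parameter; the vocabulary and logits are hypothetical values standing in for a trained model's output.

```python
import math
import random
from typing import Optional

def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab: list[str], logits: list[float], temperature: float = 1.0,
                      rng: Optional[random.Random] = None) -> str:
    """Draw one token according to the temperature-adjusted probabilities."""
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

vocab = ["refund", "replacement", "discount"]
logits = [2.0, 1.0, 0.1]   # hypothetical scores from a trained model
token = sample_next_token(vocab, logits, temperature=0.7, rng=random.Random(42))
```

A low temperature makes output more deterministic (useful for compliance-sensitive text), while a higher one increases variety.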

- Data Privacy, Security, and Compliance

Encryption and anonymization: Critical for industries like healthcare and finance, where data privacy is paramount. The architecture includes mechanisms to anonymize sensitive data and to encrypt information both at rest and in transit.

Compliance modules: Integrated to ensure that all AI processes adhere to applicable regulations, such as HIPAA in healthcare, GDPR for personal data of people in the EU, or FINRA rules in finance. These modules regularly audit and monitor AI outputs to maintain compliance.

If you want to know how generative AI improves customer experience, read this: Generative AI in Customer Experience.
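One small piece of the compliance modules described above can be sketched as an output filter that redacts personal data before generated text leaves the system and records what was caught. The two regex patterns are illustrative assumptions only; production compliance tooling needs far broader detection than this.

```python
import re

# Illustrative patterns only (assumed); real systems need much broader PII coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def audit_output(text: str) -> tuple[str, list[str]]:
    """Redact matches in a model output and return the labels that fired,
    so an audit log can record what was caught."""
    findings = []
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            findings.append(label)
            text = pattern.sub(f"[{label} REDACTED]", text)
    return text, findings

redacted, flags = audit_output("Contact jane.doe@example.com, SSN 123-45-6789.")
```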

Types of Generative AI Models

- Generative Adversarial Networks (GAN)

GANs consist of two neural networks, a generator and a discriminator, that compete against each other in a two-player game. The generator creates synthetic data (e.g., images, text, or sound) from random noise, while the discriminator tries to tell real data from generated data. The generator learns to fool the discriminator by producing increasingly realistic data, while the discriminator improves its ability to distinguish genuine samples from generated ones. This adversarial setup lets GANs produce highly realistic content, and they have been used successfully in image synthesis, art creation, and video generation.
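The competition described above is usually written as the minimax objective from the original GAN formulation (Goodfellow et al., 2014), where $D(x)$ is the discriminator's estimated probability that $x$ is real and $G(z)$ is the generator's output for noise $z$:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator maximizes $V$ by scoring real data high and generated data low; the generator minimizes it by making $D(G(z))$ large. In practice, the generator is often trained to maximize $\log D(G(z))$ instead, which gives stronger gradients early in training.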

- Transformer-Based Models

Transformers such as the GPT series have become widely adopted for natural language processing and other generative tasks. They use attention mechanisms to model the relationships between all elements in a sequence, so each token's representation can draw on every other token. This lets transformers handle long sequences, making them well suited to producing cohesive, contextually relevant content.
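The attention mechanism at the heart of transformers can be sketched in a few lines of numpy. This is single-head scaled dot-product attention without masking; the embeddings and projection matrices are random placeholders standing in for learned weights.

```python
import numpy as np

def scaled_dot_product_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """softmax(Q K^T / sqrt(d_k)) V for a single head, no masking."""
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)                          # (seq_len, seq_len) token-to-token scores
    scores = scores - scores.max(axis=-1, keepdims=True)     # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
x = rng.standard_normal((seq_len, d_model))                  # toy token embeddings
w_q, w_k, w_v = (rng.standard_normal((d_model, d_model)) for _ in range(3))
output, attn = scaled_dot_product_attention(x @ w_q, x @ w_k, x @ w_v)
```

Each row of `attn` says how much one token draws on every other token. Because that matrix is seq_len × seq_len, compute and memory grow quadratically with sequence length, which is why very long contexts are expensive.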

- Variational Autoencoders (VAEs)

VAEs are generative models that learn to encode data into a latent space and then decode it to reconstruct the original data. Because they learn probabilistic representations of the input, they can produce new samples by drawing from the learned distribution. VAEs are most often used for image generation but have also been applied to text and audio.

Want to read more about gen AI models? Refer to this blog: The Impact of Generative AI on the BFSI Sector.
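Two pieces make the VAE description above work in practice: the reparameterization trick, which samples a latent vector in a way that stays differentiable, and a KL-divergence term that keeps the learned latent distribution close to a standard normal so new samples can be drawn from it. The numpy sketch below assumes a diagonal Gaussian encoder output (`mu`, `log_var`) and omits the encoder and decoder networks themselves.

```python
import numpy as np

def reparameterize(mu: np.ndarray, log_var: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """z = mu + sigma * eps with eps ~ N(0, I). Sampling this way keeps z
    differentiable with respect to mu and log_var during training."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu: np.ndarray, log_var: np.ndarray) -> float:
    """KL( N(mu, sigma^2) || N(0, I) ) for a diagonal Gaussian, summed over latent dims."""
    return float(-0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var)))

rng = np.random.default_rng(0)
mu = np.array([0.5, -0.2])        # hypothetical encoder outputs for one input
log_var = np.array([-1.0, 0.0])
z = reparameterize(mu, log_var, rng)
```

During training the loss is reconstruction error plus this KL term; to generate new content, one samples `z` from a standard normal and passes it through the trained decoder.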

Tech Stack for Custom Gen AI Solutions

| Category | Tools & Technologies | Why choose it? |
|---|---|---|
| Generative Model Architectures | GANs, VAEs | Essential for building complex generative models capable of high-quality outputs. |
| Data Processing | NumPy, Pandas, spaCy, NLTK | Efficient data manipulation and preprocessing prepare data for training gen AI models. |
| GPU Acceleration | NVIDIA GPUs, cuDNN | Enables the high-performance computation needed to train deep learning models. |
| Programming Language | Python | Simple syntax and extensive library support make it widely used for AI and machine learning. |
| Deep Learning Framework | TensorFlow, PyTorch | Offer extensive tools for developing and training neural networks; known for scalability and flexibility. |
| Cloud Services | AWS, Azure, GCP | Provide scalable, secure infrastructure for deploying generative AI solutions. |
| Model Deployment | Docker, Kubernetes, Flask, FastAPI | Support scalable, reliable deployment of tailored AI models into production environments. |
| Web Framework | Flask, Django | Enable web application and API development for gen AI model integration. |
| Database | PostgreSQL, MongoDB | Provide secure storage capable of handling large volumes of structured and unstructured data. |
| Automated Testing | pytest | Automates tests that check the accuracy and reliability of the generative AI model. |
| Visualization | Matplotlib, Seaborn, Plotly | Visualize data and model results to aid interpretation and analysis of AI outputs. |
| Version Control | Git (GitHub, GitLab) | Support collaboration, version control, and continuous integration in AI/ML projects. |

Developing Custom AI Solutions - Step By Step

Building a custom generative AI solution requires a thorough understanding of both the technology and the particular problem to be solved. It typically involves creating and training AI models that learn to produce new outputs from raw data by optimizing a specific objective, usually a loss function. A good generative AI solution requires several critical steps: defining the problem, gathering and preprocessing data, choosing suitable algorithms and models, training and fine-tuning the models, and deploying the solution in a real-world environment. Let's walk through the development process of a custom gen AI solution step by step:

Step 1: Defining the problem and setting objectives

Developing a custom gen AI solution begins with a well-defined need or problem. The first stage describes the exact challenge, such as writing text in a specific style, generating images within predetermined parameters, or mimicking particular sounds or music. Each of these problems requires its own strategy and a matching dataset. Next comes defining the problem in detail and outlining the intended results. This could involve choosing which languages to support or deciding how creative the writing needs to be. For image generation, aspects such as aspect ratio, resolution, and creative style must be selected, because they affect the model's complexity and the amount of data required.

Once the problem and output requirements have been determined, it is important to dig into the underlying technology. This means choosing an appropriate neural network architecture, such as transformer-based models like GPT for text problems or Convolutional Neural Networks (CNNs) for image tasks. Knowing the strengths and weaknesses of the selected technology is crucial. For instance, GPT-3 does a great job producing a variety of texts, but it can struggle to maintain consistency across longer stories, which underlines the importance of setting realistic expectations.

Finally, defining quantitative metrics is key to achieving the desired performance. BLEU and ROUGE scores measure how closely generated text overlaps with reference texts, serving as proxies for relevance and fluency, while the Inception Score and Fréchet Inception Distance (FID) evaluate the quality and diversity of generated images. These metrics offer a practical way to evaluate the model's effectiveness and guide future improvements.
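To show what an overlap metric like ROUGE actually measures, here is a simplified ROUGE-1 F1 score in plain Python. It is an illustrative assumption-laden version (lowercased whitespace tokens, no stemming), so its numbers will not exactly match established ROUGE implementations.

```python
from collections import Counter

def rouge1_f1(candidate: str, reference: str) -> float:
    """Unigram-overlap F1 between generated text and a reference (simplified ROUGE-1)."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # shared words, each counted at most min(count) times
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)

score = rouge1_f1("the order ships today", "your order ships today")
```

Here three of four words overlap in each direction, so precision, recall, and F1 are all 0.75. Because the metric only counts word overlap, it can reward fluent-looking text that is factually wrong, which is why human review still matters.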

Step 2: Data collection and management

Securing an extensive, high-quality dataset before training your AI model is essential for a custom gen AI solution. The process begins by identifying and sourcing data from diverse, reliable origins such as databases, APIs, sensor outputs, or custom collections. The choice of sources directly affects the model's authenticity and performance. Generative AI models in particular need vast and varied datasets to produce diverse and accurate outputs. For instance, training a model to generate images requires a dataset rich in variations (different lighting, angles, and backgrounds) so the model can generalize across scenarios.

Data quality and relevance are critical; the dataset must accurately represent the tasks the model will perform. Noise, inaccuracies, or low-quality data can degrade model performance and introduce biases. A thorough cleaning and preprocessing phase is therefore essential, covering tasks like handling missing values, removing duplicates, and ensuring the data meets the model's format requirements, such as tokenized text or normalized images.

Another crucial aspect is managing copyrighted and sensitive information. The risk of inadvertently collecting such data can be reduced with automated filtering tools and manual audits, helping ensure compliance with legal and ethical standards. Where they apply, data privacy laws such as GDPR and CCPA must be followed, which includes anonymizing personal data, honoring opt-out requests, and securing data through encryption and secure storage.

As the model evolves, data versioning and management become essential. Tools like DVC (Data Version Control) track changes to data systematically, enabling reproducibility and consistent model development.
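Data-versioning tools such as DVC identify dataset versions by the content of the files rather than their names. The standard-library sketch below illustrates that underlying idea only; it is not DVC's API or file format. It fingerprints every file in a directory and reports which files changed between two versions.

```python
import hashlib
import tempfile
from pathlib import Path

def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file's bytes, read in chunks so large files need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map every file under data_dir (as a relative path) to its content hash."""
    return {
        str(p.relative_to(data_dir)): file_digest(p)
        for p in sorted(data_dir.rglob("*")) if p.is_file()
    }

def changed_files(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """Files added, removed, or modified between two dataset versions."""
    return sorted(k for k in old.keys() | new.keys() if old.get(k) != new.get(k))

# Usage with a throwaway directory standing in for a real dataset.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train.csv").write_text("id,text\n1,hello\n")
    v1 = build_manifest(root)
    (root / "train.csv").write_text("id,text\n1,hello\n2,world\n")
    (root / "labels.csv").write_text("id,label\n1,pos\n")
    v2 = build_manifest(root)
    diff = changed_files(v1, v2)
```

Storing such a manifest alongside each training run makes it possible to say exactly which data a given model version was trained on.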

Step 3: Data processing and labeling

Once data is collected, it goes through a refinement process to prepare it for model training. This begins with data cleaning, where inconsistencies, missing values, and errors are eliminated using tools like pandas in Python. For text data, cleaning can involve removing special characters, correcting spelling errors, or handling emojis. Next, normalization or standardization ensures that no single feature disproportionately influences the model because of its scale. Techniques such as min-max scaling and z-score normalization are commonly used to bring data into a consistent range or distribution.

Data augmentation plays a critical role in enriching the dataset, particularly for computer vision models. The dataset is artificially expanded by applying transformations like rotation, zooming, or color variation, which improves the model's robustness and helps prevent overfitting. For text data, augmentation can involve synonym replacement or sentence shuffling, adding variability to the training data.

Feature extraction and engineering are also vital, because raw data often isn't suitable for direct input into models. For images, this could mean extracting edge patterns; for text, it can mean tokenization or embeddings such as Word2Vec or BERT. Audio data might require spectral features such as Mel-frequency cepstral coefficients (MFCCs) for voice recognition or music analysis. Effective feature engineering increases the data's predictive power and makes models more efficient.

Data splitting is essential for model evaluation and involves dividing the dataset into training, validation, and test subsets. The validation set supports hyperparameter tuning and guards against overfitting, while the held-out test set checks how well the model generalizes to unseen data.

In a custom gen AI solution, data labeling is a crucial step, particularly for supervised learning tasks. It involves annotating data with the correct categories or answers, such as labeling images with the objects they depict or tagging text with sentiment. Manual labeling can be time-consuming, but it is vital for model accuracy. Semi-automated methods, where AI pre-labels data and humans verify it, are increasingly used to improve efficiency without compromising label quality.

Ensuring data consistency, especially for time-series data, involves synchronizing timestamps and filling gaps through interpolation. For text data, embeddings convert words into vectors that capture semantic meaning. Pre-trained embeddings such as GloVe, FastText, or BERT provide dense vector representations that further refine the data for model training.
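Min-max scaling, z-score normalization, and a train/validation/test split can be sketched with the Python standard library alone. The split fractions (80/10/10) and the fixed shuffle seed are assumptions chosen for illustration.

```python
import random
import statistics

def min_max_scale(values: list[float]) -> list[float]:
    """Rescale values to the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]

def z_score(values: list[float]) -> list[float]:
    """Center values on 0 with unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

def train_val_test_split(items, val_frac=0.1, test_frac=0.1, seed=0):
    """Shuffle reproducibly, then carve off test and validation subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

scaled = min_max_scale([10, 20, 30])
standardized = z_score([2, 4, 4, 4, 5, 5, 7, 9])
train, val, test = train_val_test_split(range(100))
```

In a real pipeline, split first and compute the scaling statistics (min/max or mean/std) on the training subset only, then apply them to validation and test data, so no information leaks from held-out data into training.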

Step 4: Choosing a foundational model

With the data prepared, the next step in developing a custom gen AI solution is selecting a foundational model, such as GPT-4, LLaMA-3, Mistral, or Google Gemini. These models offer pre-trained capabilities that can be fine-tuned to meet your project's specific requirements, which saves time and computational resources. Foundation models are large models pre-trained on vast datasets, which lets them capture a wide range of patterns, structures, and domain knowledge. Building on them allows developers to tap into these capabilities and customize them for the task at hand.

Several factors matter when selecting a foundational model for a custom gen AI solution. The nature of the task is crucial: GPT models are well suited to generating coherent, contextually relevant text, making them a good fit for content creation, chatbots, or code generation, while LLaMA could be the better choice for tasks that require multilingual capabilities or cross-language comprehension. Compatibility between the model and your data also matters. A text-only model like GPT may not be appropriate for image generation, whereas a model such as DALL-E 2, built specifically to generate images from text descriptions, would be more fitting.

Model size and computational requirements are also important. Larger models like GPT-4 offer exceptional performance but demand significant computational power and memory. Depending on your resources, a smaller model or a different architecture may be necessary to balance performance and feasibility. The model's ability to carry knowledge from one task to another, known as transfer learning, is another critical factor. Some models, such as BERT, fine-tune effectively on relatively small datasets, allowing them to perform a variety of language processing tasks.

Finally, the community and ecosystem around a model can significantly influence the choice. Models with strong community support, such as those available through Hugging Face, come with extensive libraries, tools, and pre-trained checkpoints that simplify implementation, fine-tuning, and deployment. This support network reduces development time and improves efficiency, making the choice of foundational model a key element in successfully deploying a custom gen AI solution.
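A quick back-of-the-envelope calculation helps with the size trade-off above. The sketch estimates memory for model weights at different numeric precisions, plus a commonly used rule of thumb of about 16 bytes per parameter for full fine-tuning with the Adam optimizer in mixed precision (fp16 weights and gradients, an fp32 master copy of the weights, and two fp32 Adam moment buffers). The 7-billion-parameter figure is a hypothetical example, and activations and inference caches are ignored, so treat the results as lower bounds.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1, "int4": 0.5}

def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

def full_finetune_memory_gb(num_params: float, bytes_per_param: int = 16) -> float:
    """Rule-of-thumb memory for full fine-tuning with Adam in mixed precision:
    2 (fp16 weights) + 2 (fp16 grads) + 4 (fp32 master weights) + 8 (two fp32 Adam moments)."""
    return num_params * bytes_per_param / 1e9

params = 7e9  # hypothetical 7B-parameter model
inference_fp16 = weight_memory_gb(params, "fp16")
inference_int4 = weight_memory_gb(params, "int4")
training_full = full_finetune_memory_gb(params)
```

The gap between roughly 14 GB to serve such a model in fp16 and over 100 GB to fully fine-tune it is one reason parameter-efficient fine-tuning and quantization are popular when resources are limited.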

Step 5: Fine-tuning and RAG

Fine-tuning and Retrieval-Augmented Generation (RAG) are essential techniques for refining a custom gen AI solution so it produces high-quality, contextually appropriate outputs. Fine-tuning adapts a pre-trained model to specific tasks or datasets, improving its ability to generate relevant and nuanced outputs. The process typically begins by selecting a foundational model aligned with the generative task, such as GPT for text or a Convolutional Neural Network (CNN) for images. The model's architecture stays largely the same, while its weights are adjusted to better reflect the nuances of the new data. Fine-tuning involves:

- Data preparation, where the data is carefully processed and formatted;

- Model adjustments, tailoring the final layers to specific output types;

- Parameter optimization, tuning learning rates and making layer-specific adjustments; and

- Regularization, using techniques like dropout or weight decay to prevent overfitting so the model generalizes well to unseen data.
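A minimal numpy sketch of one common fine-tuning pattern from the list above: keep the pre-trained body frozen and train only a new final layer, with weight decay as the regularizer. Everything here is an assumption for illustration. A fixed random projection stands in for the pre-trained model, the downstream task is a toy binary classification, and the head is a logistic-regression layer trained by plain gradient descent. Real fine-tuning would use a framework such as PyTorch or TensorFlow and often unfreezes some layers too.

```python
import numpy as np

def frozen_backbone(x: np.ndarray, w_base: np.ndarray) -> np.ndarray:
    """Stand-in for a pre-trained model body: its weights are never updated."""
    return np.tanh(x @ w_base)

def logistic_loss(feats, labels, w, b, weight_decay=1e-3):
    """Binary cross-entropy (written stably as log(1 + e^z) - y*z) plus L2 weight decay."""
    logits = feats @ w + b
    bce = np.mean(np.logaddexp(0.0, logits) - labels * logits)
    return bce + 0.5 * weight_decay * np.sum(w**2)

def fine_tune_head(feats, labels, lr=0.2, weight_decay=1e-3, epochs=3000):
    """Train only a new linear head on top of the frozen features (gradient descent)."""
    w = np.zeros(feats.shape[1])
    b = 0.0
    for _ in range(epochs):
        probs = 1.0 / (1.0 + np.exp(-(feats @ w + b)))
        err = probs - labels
        w -= lr * (feats.T @ err / len(labels) + weight_decay * w)  # weight decay shrinks w
        b -= lr * err.mean()
    return w, b

rng = np.random.default_rng(0)
w_base = rng.standard_normal((2, 16))            # "pre-trained" weights (kept frozen)
x = rng.standard_normal((200, 2))
y = (x[:, 0] + x[:, 1] > 0).astype(float)        # toy downstream task
feats = frozen_backbone(x, w_base)
w, b = fine_tune_head(feats, y)
accuracy = float(((feats @ w + b > 0) == (y == 1)).mean())
```

Freezing the body keeps training cheap and reduces the risk of overwriting what the model learned during pre-training, at the cost of less flexibility than updating all weights.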

Retrieval-augmented generation (RAG) plays a key role in generative AI development by combining a retrieval phase with a generation phase. During retrieval, the system searches a database of organizational documents for information relevant to the user's query. This phase uses techniques like semantic search, which interprets the intent behind a query to find semantically related results. Retrieval is powered by embeddings, which convert text into vector representations using models like BERT or GloVe. These vectors are stored in scalable vector databases such as Pinecone or Weaviate. Document chunking further refines retrieval by breaking large documents into smaller, topic-specific segments, improving the accuracy and relevance of what is retrieved.

Once the relevant information is retrieved, it is used to augment the generation step: the retrieved content is supplied to the model alongside the query, so the custom gen AI solution can produce contextually rich responses. General-purpose large language models (LLMs) or task-specific models then generate responses grounded in the retrieved content, improving accuracy and relevance. The effectiveness of a RAG system lies in combining domain-specific context from retrieval with strong generation capabilities, enabling the model to answer complex user queries with precision and contextual accuracy.
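The retrieve-then-augment flow can be sketched end to end in plain Python. Several simplifying assumptions apply: a bag-of-words count vector stands in for a learned embedding model, an in-memory list replaces a vector database, the documents are made-up examples, and the final call to an LLM is left out; the sketch stops at building the augmented prompt.

```python
import math
import re
from collections import Counter

def chunk_text(text: str, max_words: int = 40) -> list[str]:
    """Split a document into fixed-size word chunks (a simple chunking strategy)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(text: str) -> Counter:
    """Toy 'embedding': term counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]

def build_prompt(query: str, context: list[str]) -> str:
    """Augment the user question with retrieved context before calling the generator."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\nContext:\n{joined}\nQuestion: {query}"

docs = [  # hypothetical organizational documents
    "Refunds are issued within 14 days of receiving the returned item.",
    "Our support team is available Monday to Friday, 9am to 5pm.",
    "Shipping is free for orders above 50 dollars.",
]
chunks = [c for d in docs for c in chunk_text(d)]
question = "How many days until refunds are issued?"
top = retrieve(question, chunks, k=1)
prompt = build_prompt(question, top)
```

Swapping in a real embedding model and vector database changes the quality of `retrieve`, but the overall shape (chunk, embed, rank by similarity, prepend to the prompt) stays the same.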

Step 6: Model evaluation and refinement

Analyzing an AI model's performance after training is essential for a custom gen AI solution, and it is where the expertise of data scientists, machine learning engineers, and AI researchers pays off. Evaluation confirms that the model produces the intended results and behaves reliably across situations. It establishes a baseline for the model's accuracy by comparing AI-generated outputs against reference (ground-truth) data. Effectiveness is measured with key metrics, such as BLEU and ROUGE scores for text tasks or Fréchet Inception Distance (FID) for image generation. Monitoring the loss function, which measures the discrepancy between expected and actual results, also shows whether the model is converging and how it performs overall.

Evaluation is only one phase in an ongoing cycle of improvement. Over time, the model is refined iteratively, often by adapting to new data or user input. Hyperparameter tuning, which optimizes variables like learning rate and batch size, can greatly improve performance. Depending on the evaluation's findings, architectural changes, such as adding or removing layers or neurons, may also be required. Transfer learning is sometimes used to boost performance on particular tasks by starting from another model's pre-trained weights. Refinement also includes qualitative analysis: generated outputs are examined manually to catch biases or inaccuracies that quantitative metrics might miss. Regularization strategies such as dropout reduce overfitting so the model performs well on new data. A feedback loop, in which users or downstream systems rate the model's outputs, supports ongoing enhancement.

In production, tracking data drift is crucial to keeping the model accurate and applicable as data patterns change. Finally, adversarial training, which exposes the model to adversarial examples, makes it more robust and dependable; the same adversarial principle is central to how Generative Adversarial Networks (GANs) are trained. In short, evaluation captures a snapshot of the model's current capabilities, and continuous improvement is needed to sustain and raise its effectiveness.
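Watching the validation loss is what makes the overfitting described above visible: when validation loss stops improving while training continues, the model is starting to memorize. A common response is early stopping. The sketch below assumes a simple "patience" rule, and the loss values are made-up numbers for illustration.

```python
def early_stopping_epoch(val_losses: list[float], patience: int = 3,
                         min_delta: float = 0.0) -> tuple[int, int]:
    """Return (best_epoch, stop_epoch). Training halts once validation loss has
    failed to improve by more than min_delta for `patience` consecutive epochs."""
    best_epoch, best_loss, wait = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, wait = epoch, loss, 0   # new best: reset the counter
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

losses = [0.90, 0.70, 0.60, 0.58, 0.59, 0.61, 0.63, 0.64]   # illustrative values
best, stopped = early_stopping_epoch(losses, patience=3)
```

Here training would stop at epoch 6 and the checkpoint from epoch 3 (the lowest validation loss) would be kept. `patience` and `min_delta` are themselves hyperparameters worth tuning.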

Step 7: Deployment and monitoring

Deploying a tailored AI model in production is intricate and goes beyond technical issues, with ethical principles such as accountability, transparency, and fairness also at stake. The first step in deploying a custom gen AI solution is establishing the necessary infrastructure. Depending on the model's size and complexity, this may call for specialized hardware like GPUs or TPUs. Cloud platforms offer managed services for scalable model deployment, such as Amazon SageMaker on AWS, Vertex AI (formerly AI Platform) on Google Cloud, and Azure Machine Learning. Containerization with tools like Docker ensures consistency across environments, and orchestration tools like Kubernetes manage and scale these containers to meet demand. Models are often deployed behind APIs built with frameworks like Flask or FastAPI so that applications and services can access them.

Ethical factors weigh heavily in deployment. Anonymization is critical for protecting data privacy, particularly when handling sensitive user data. To ensure fairness for all user groups, bias tests must be run to find and address unintended biases the model may have picked up during training. To maintain transparency, open channels are established for users to raise complaints or questions, and comprehensive documentation of the model's capabilities, constraints, and expected behaviors is maintained.

Keeping the model effective, accurate, and ethically sound requires constant observation. Monitoring tools track real-time performance metrics, including error rates, latency, and throughput, and alerts are configured to flag anomalies. Feedback loops collect user feedback on model results, helping identify problems and potential improvements. Because shifts in incoming data can degrade performance, it is important to watch for data and model drift; TensorFlow Data Validation is one tool that can detect such drift.

User experience (UX) monitoring is essential for generative AI applications that interact directly with users, such as chatbots or AI-driven design tools, because understanding user interactions guides improvements that better meet user needs. Periodic retraining cycles, informed by feedback and monitored metrics, keep the model accurate and relevant. Detailed logging and audit trails ensure traceability and accountability, particularly in critical applications.

Ethical monitoring systems detect unintended consequences or harmful behavior of the AI, and the guidelines and policies that govern them are updated continuously. Security measures, including regular vulnerability checks, data encryption, and proper authentication, protect the deployment infrastructure.

Overall, deploying a tailored AI model is a multifaceted process that balances technical execution with ethical responsibility. Continuous monitoring and refinement keep the AI solution functional, responsive, and aligned with technical and ethical standards in real-world scenarios.
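One simple, widely used drift check is the Population Stability Index (PSI), which compares a feature's distribution in production against its distribution at training time. The sketch below is a plain-Python version under assumptions: equal-width bins taken from the training data's range, a small epsilon for empty bins, and synthetic feature values. A frequently cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but thresholds should be set per use case.

```python
import math

def population_stability_index(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Compare a feature's production distribution (actual) with its training
    distribution (expected), using equal-width bins over the training range."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        eps = 1e-6  # avoid log(0) for empty bins
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

train_feature = [i / 100 for i in range(100)]          # synthetic training-time values
production_same = list(train_feature)
production_shifted = [v + 0.5 for v in train_feature]  # simulated drift
psi_same = population_stability_index(train_feature, production_same)
psi_shifted = population_stability_index(train_feature, production_shifted)
```

In a monitoring job, a PSI above the chosen threshold could raise an alert or trigger the retraining cycle described above.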

Best Practices to Follow While Developing a Custom Gen AI Solution

- Define Clear Objectives: During design and development, precisely outline the problem and goals to align the solution with desired outcomes.

- Gather High-Quality Data: Use clean, relevant data for model training to enhance accuracy and relevance.

- Use Appropriate Algorithms: Select and test algorithms to identify the best performer for the specific problem.

- Create a Robust and Scalable Architecture: Build an architecture with distributed computing, load balancing, and caching for scalability and reliability.

- Optimize for Performance: Implement caching, data partitioning, and asynchronous processing to enhance speed and efficiency.

- Monitor Performance: Continuously track performance using profiling tools, log analysis, and metrics to detect and resolve issues.

- Ensure Security and Privacy: Implement encryption, access control, and data anonymization to safeguard security and user privacy.

- Test Thoroughly: Conduct extensive testing in diverse scenarios to ensure quality and reliability.

- Document the Development Process: Maintain thorough documentation of code, data, and experiments to ensure transparency and reproducibility.

- Continuously Improve the Solution: Iterate based on user feedback and performance monitoring, and keep adding new features and capabilities.
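
For the data anonymization practice in the list above, one lightweight building block is replacing direct identifiers with keyed hashes before data reaches training sets or logs. Strictly speaking, this is pseudonymization rather than full anonymization, because anyone holding the key can re-link values. The field names, the lowercase normalization, and the demo key below are illustrative assumptions; in practice the key would come from a secrets manager.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Map an identifier to a stable HMAC-SHA256 hex digest (64 characters)."""
    normalized = value.strip().lower()  # assumption: identifiers are case-insensitive
    return hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, pii_fields: set, secret_key: bytes) -> dict:
    """Return a copy of `record` with the listed PII fields pseudonymized."""
    return {
        key: pseudonymize(str(value), secret_key) if key in pii_fields else value
        for key, value in record.items()
    }


# Hypothetical key and record for illustration only; never hard-code real keys.
demo_key = b"example-key-load-from-a-secrets-manager"
row = {"email": "Jane.Doe@Example.com", "user_id": 42, "prompt": "Summarize my last order"}
print(scrub_record(row, {"email", "user_id"}, demo_key))
```

Because the same input always yields the same token under a given key, pseudonymized columns can still be joined across datasets without exposing the raw identifier.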

Read "Enterprise AI: Applications, Benefits, Challenges, & More" to understand the different versions of artificial intelligence and what enterprise AI customization involves.

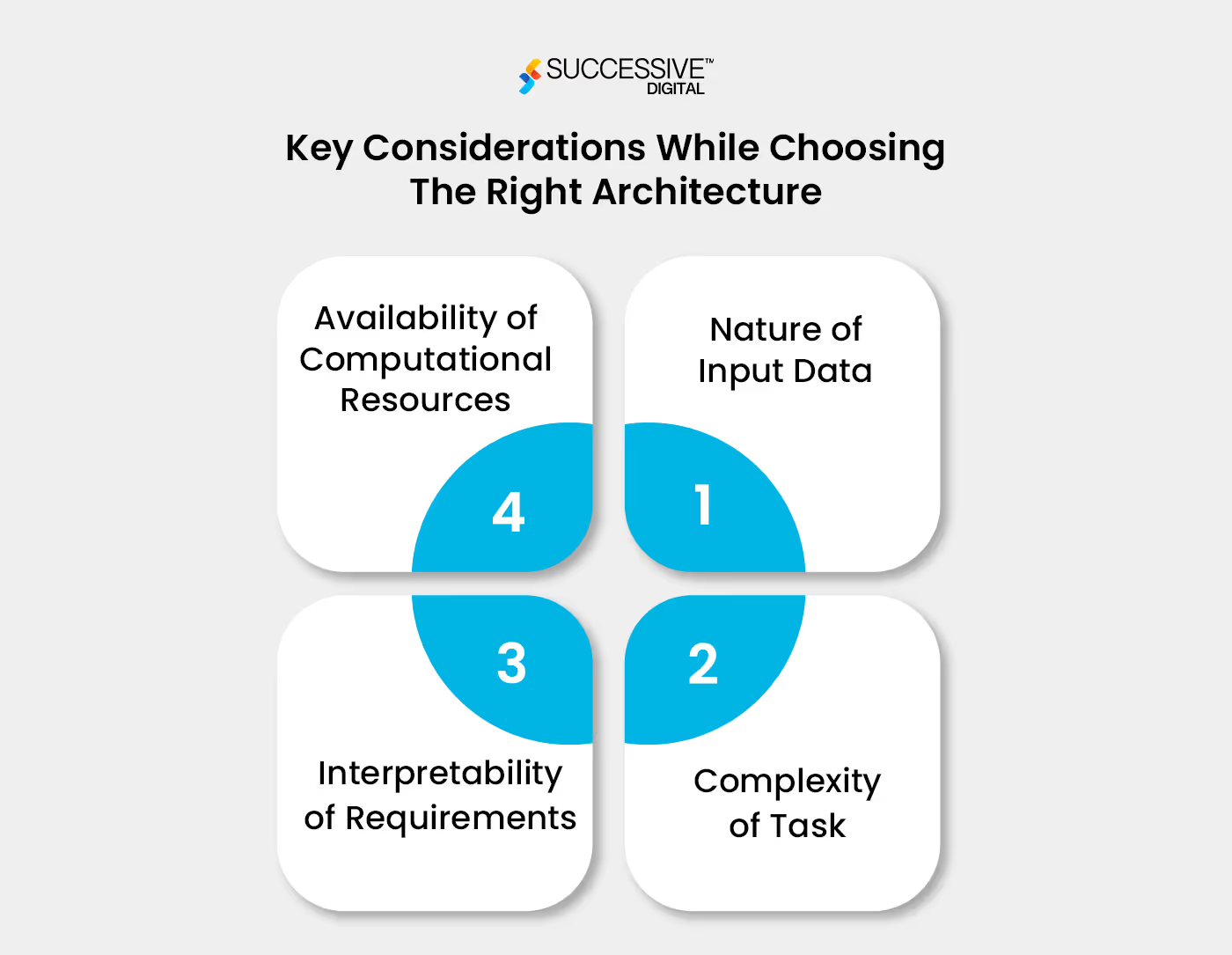

Key Considerations When Choosing the Right Architecture

- Nature of Input Data

For tasks involving sequential data such as text, speech, or time series, Recurrent Neural Networks (RNNs) are a natural fit because they model temporal dependencies, where order and context from previous time steps matter. Variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks are particularly adept at capturing long-term dependencies and mitigating problems like vanishing gradients. For spatial data tasks like image analysis or spatial pattern recognition, Convolutional Neural Networks (CNNs) are preferred. CNNs are designed to learn spatial hierarchies and patterns within images through a series of convolutional layers, pooling operations, and non-linear activation functions, and they excel at image classification, object detection, and image generation.
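
To make the contrast concrete, here is a deliberately tiny, framework-free sketch: a scalar Elman-style RNN whose final state depends on the order of its inputs, and a 1-D convolution whose outputs respond only to a local window. The weights and the edge-detecting kernel are arbitrary illustrative values. Real models learn these weights and, for images, use 2-D convolutions over tensors through libraries such as PyTorch or TensorFlow.

```python
import math


def rnn_final_state(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Scalar Elman RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b). Weights are illustrative."""
    h = 0.0
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)  # new state mixes the input with memory
    return h


def conv1d(signal, kernel):
    """'Valid' 1-D cross-correlation, which deep-learning libraries call convolution."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


# Same inputs, different order -> different final state (order matters to an RNN).
print(rnn_final_state([1, 0, 0]), rnn_final_state([0, 0, 1]))

# A [-1, 1] kernel responds wherever neighboring values change (a 1-D "edge").
print(conv1d([0, 0, 1, 1, 0], [-1, 1]))  # → [0, 1, 0, -1]
```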

- Complexity of Task

For simple generative tasks, basic architectures such as simple RNNs for text generation or shallow CNNs for image processing are often adequate. However, tasks that require capturing intricate patterns and features typically call for more advanced architectures. For example, generating high-resolution images or complex text may necessitate deeper CNNs or advanced RNN variants such as LSTMs and GRUs. The architecture's complexity should match the task's complexity so that the model can capture nuanced details effectively.

- Interpretability Requirements

When understanding the model's decision-making process is essential, the interpretability of the architecture plays a crucial role. Because Recurrent Neural Networks (RNNs) process data step by step, they can be easier to interpret in tasks like text generation, where it is important to trace the influence of previous inputs. Convolutional Neural Networks (CNNs), in contrast, may be harder to interpret despite their effectiveness with spatial data, because of their layered feature extraction. Techniques such as activation map and saliency map visualizations can help interpret CNN models, although they may not offer the same clarity as tracing an RNN's step-by-step processing.

- Availability of Computational Resources

When resources are constrained, it is crucial to choose architectures that demand less computational power. Lightweight CNNs or simpler RNN configurations can perform effectively without requiring extensive resources. It is also essential to evaluate the model's efficiency during inference and deployment to ensure it is practical. In high-resource environments, by contrast, more complex architectures can be used, such as deeper CNNs with many layers for detailed feature extraction or advanced RNNs with improved memory capabilities. Ample computational power also enables experimentation with advanced models and larger datasets, which can improve performance and model capabilities. We also help you optimize your existing AI models; let's look at AI model optimization and why it is important.
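
Parameter count is one quick proxy for the computational cost discussed above. The helpers below apply the standard single-layer formulas; they assume the PyTorch convention of separate input and hidden bias vectors for recurrent layers (Keras, for example, uses a single bias by default, so its counts are slightly lower). The layer sizes in the example are arbitrary.

```python
def recurrent_params(input_size: int, hidden_size: int, gates: int) -> int:
    """Single-layer recurrent parameter count with PyTorch-style biases.

    gates = 1 for a simple RNN, 3 for a GRU, 4 for an LSTM. Each gate has an
    input weight matrix, a hidden weight matrix, and two bias vectors.
    """
    per_gate = hidden_size * (input_size + hidden_size) + 2 * hidden_size
    return gates * per_gate


def conv2d_params(in_channels: int, out_channels: int, kernel_size: int) -> int:
    """Square-kernel 2-D convolution: one k x k x in_channels filter plus a bias per output channel."""
    return out_channels * (in_channels * kernel_size * kernel_size) + out_channels


x, h = 128, 256  # arbitrary example sizes
print("RNN :", recurrent_params(x, h, gates=1))  # → 98816
print("GRU :", recurrent_params(x, h, gates=3))  # → 296448
print("LSTM:", recurrent_params(x, h, gates=4))  # → 395264
print("Conv2d 3->64, 3x3:", conv2d_params(3, 64, 3))  # → 1792
```

Parameter count is only part of the picture: a convolution's compute also scales with the input's spatial resolution, and a recurrent layer's compute scales with sequence length.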

AI Model Optimization

Businesses across industries are developing custom gen AI solutions and exploring the capabilities of generative AI, but the process doesn't end when the model is successfully deployed. Optimizing the model so that it delivers the best results as efficiently as possible is also essential. AI models can suffer from model drift and operational inefficiency, so applying the right optimization strategies helps AI/ML engineers improve performance and mitigate these issues.

Why Optimize?

Optimization is essential in AI model development to enhance performance, speed, and efficiency. Effective optimization ensures that models run faster with lower latency, require less computational power, and use available resources better. This is especially vital for deployment in resource-constrained environments or real-time applications where efficiency directly impacts the user experience and operational costs. But how is optimization performed, and what techniques are utilized to optimize the AI model? Let’s understand further:

Quantization Techniques

Quantization techniques, such as Post-Training Quantization (PTQ) and Quantization-Aware Training (QAT), are critical for reducing model size and increasing inference speed. PTQ converts a trained model's weights and activations from floating-point precision to lower-bit-width representations, such as int8, without retraining; activation ranges are typically estimated from a small calibration dataset. This shrinks the model's memory footprint and accelerates inference. QAT, in contrast, simulates quantization during training, allowing the model to adapt to the reduced precision so that it retains accuracy after quantization. Both strategies prepare models to run efficiently on hardware with low-precision support.
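
The core arithmetic of post-training quantization fits in a few lines. This sketch assumes symmetric, per-tensor int8 quantization, which is one common scheme among several: real toolkits also offer per-channel scales, zero points for asymmetric ranges, and calibrated activation ranges. Mapping codes back with `dequantize` is roughly what QAT's simulated ("fake") quantization does during training.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: w ~= scale * q, with q an integer in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate floating-point values."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.003, 0.9]  # illustrative weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, scale)
print(max(abs(w - r) for w, r in zip(weights, restored)))  # rounding error, at most scale / 2
```

Storing each weight as one int8 byte instead of a four-byte float32 cuts weight memory by about 4x, at the cost of the small rounding error printed above.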

Pruning and Regularization

Pruning removes redundant or less relevant parameters from the model, resulting in a sparser network with lower computational and memory requirements. Standard techniques include weight (unstructured) pruning, in which weights with little impact on the output are set to zero, and structured pruning, in which entire neurons or channels are removed. Regularization approaches such as dropout and L2 regularization prevent overfitting by discouraging the model from becoming overly complex, which improves its ability to generalize. Together, pruning and regularization make tailored AI models more efficient and robust.
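
Unstructured magnitude pruning, one common form of the weight pruning described above, can be sketched in plain Python alongside the L2 penalty term. The 50% sparsity target and the penalty strength `lam` are illustrative assumptions. Frameworks ship their own utilities (for example, PyTorch's `torch.nn.utils.prune`) that also keep the pruning mask applied during fine-tuning.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def l2_penalty(weights, lam):
    """L2 regularization term added to the training loss: lam * sum(w^2)."""
    return lam * sum(w * w for w in weights)


layer = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2]  # illustrative weights
print(magnitude_prune(layer, sparsity=0.5))  # → [0.9, 0.0, 0.3, 0.0, -0.7, 0.0]
print(l2_penalty(layer, lam=1e-4))
```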

Knowledge Distillation

Knowledge distillation involves training a smaller, simpler model (the student) to mimic the behavior of a larger, more complex model (the teacher). The smaller model is trained to replicate the teacher model’s outputs or internal representations, allowing it to achieve similar performance levels with reduced computational demands. This technique enables faster deployment and inference while maintaining high accuracy, making it suitable for environments with stringent resource constraints.
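
Below is a minimal sketch of one widely used distillation loss, the temperature-softened formulation popularized by Hinton et al. (2015). It blends a KL-divergence term that pulls the student's softened predictions toward the teacher's with an ordinary cross-entropy term on the true label. The temperature `T=2.0` and weight `alpha=0.5` are illustrative assumptions, and the toy logits stand in for real model outputs.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; subtracting the max keeps exp() numerically stable."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=2.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(label, student)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL divergence between the softened distributions, with the teacher as the target.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Standard cross-entropy against the hard label at temperature 1.
    hard = -math.log(softmax(student_logits)[label])
    # The T^2 factor keeps soft-target gradients on a comparable scale as T changes.
    return alpha * temperature ** 2 * kl + (1 - alpha) * hard


teacher = [4.0, 1.0, 0.5]  # hypothetical teacher logits for one example
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 3.0, 1.0]
print(distillation_loss(close_student, teacher, label=0))
print(distillation_loss(far_student, teacher, label=0))  # larger: student disagrees with teacher
```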

Hyperparameter Tuning

Hyperparameter tuning improves model performance by finding the best combination of the hyperparameters that govern the training process (for example, learning rate, batch size, or dropout rate). Standard techniques include:

- Grid search, which exhaustively evaluates every combination in a predefined grid of values.

- Random search, which evaluates a randomly sampled subset of combinations.

- Bayesian optimization, which builds a probabilistic model from previous evaluations and uses it to choose the next combinations to try.

Effective hyperparameter tuning can significantly improve model performance and efficiency.
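
Grid search and random search are simple enough to sketch directly. The objective below is a made-up stand-in for "train a model and return validation loss", with its minimum placed at a learning rate of 1e-3 and a dropout of 0.2; the search ranges and trial count are also illustrative assumptions. Bayesian optimization is not shown; libraries such as Optuna provide sequential model-based samplers for that purpose.

```python
import itertools
import math
import random


def toy_validation_loss(lr: float, dropout: float) -> float:
    """Hypothetical stand-in for training + validation; lowest at lr=1e-3, dropout=0.2."""
    return (math.log10(lr) + 3) ** 2 + (dropout - 0.2) ** 2


def grid_search(objective, grid):
    """Evaluate every combination in `grid` (a dict of name -> list of values)."""
    best = None
    for values in itertools.product(*grid.values()):
        params = dict(zip(grid.keys(), values))
        score = objective(**params)
        if best is None or score < best[0]:
            best = (score, params)
    return best


def random_search(objective, samplers, n_trials, seed=0):
    """Evaluate `n_trials` random draws; `samplers` maps name -> function(rng) -> value."""
    rng = random.Random(seed)  # fixed seed makes the search reproducible
    best = None
    for _ in range(n_trials):
        params = {name: draw(rng) for name, draw in samplers.items()}
        score = objective(**params)
        if best is None or score < best[0]:
            best = (score, params)
    return best


grid = {"lr": [1e-4, 1e-3, 1e-2], "dropout": [0.0, 0.2, 0.5]}
samplers = {
    "lr": lambda rng: 10 ** rng.uniform(-5, -1),  # log-uniform learning rate
    "dropout": lambda rng: rng.uniform(0.0, 0.5),
}
print("grid:  ", grid_search(toy_validation_loss, grid))
print("random:", random_search(toy_validation_loss, samplers, n_trials=20))
```

Grid search here costs 9 evaluations and can only return grid points; random search spends a fixed budget and explores continuous ranges, which is why it often scales better when only a few hyperparameters really matter.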

Optimization Tools

Tools such as NVIDIA TensorRT and Apache TVM help optimize models for deployment. TensorRT optimizes deep learning inference on NVIDIA GPUs using techniques such as layer fusion, kernel auto-tuning, and reduced-precision (FP16 and INT8) execution. Apache TVM, an open-source deep learning compiler, provides a modular stack for optimizing and compiling models for multiple hardware platforms, enabling efficient model execution. These tools can improve throughput, reduce latency, and make resource utilization more efficient in production environments.

Security and Compliance

Security is of utmost importance while developing any new product, software, or solution. Let’s talk about ensuring security in tailored AI models:

AI Security is Non-Negotiable

Ensuring AI security and compliance is vital, especially in industries that deal with sensitive or personal data, such as healthcare, banking, and critical infrastructure. AI systems must be protected from unauthorized access, data breaches, and misuse, as failures in these areas can have serious legal, financial, and reputational consequences. Implementing strong security measures is critical for ensuring the integrity and confidentiality of the data and AI models.

Data Encryption and Secure Handling

Encryption protocols are essential for protecting data in transit and at rest. AES (Advanced Encryption Standard) with 256-bit keys (AES-256) is widely used to prevent unauthorized parties from reading stored data. Protocols like TLS (Transport Layer Security) encrypt data as it travels across networks, protecting it from eavesdropping and tampering. Secure handling also requires strict authentication procedures and access controls to protect data further.
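
For data in transit, Python's standard-library `ssl` module can enforce the TLS practices described above. This is a minimal client-side sketch assuming Python 3.7 or later; the endpoint URL in the comment is hypothetical. Encrypting data at rest with AES-256 is not shown, since it usually relies on a third-party library (for example, the `cryptography` package's AES-GCM primitive) or a cloud key management service.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """TLS client context that verifies certificates and refuses protocols older than TLS 1.2."""
    # create_default_context() loads the system's trusted CA certificates and
    # enables certificate verification and hostname checking.
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_tls_context()
print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)

# Hypothetical usage against an internal model endpoint (not executed here):
# import urllib.request
# urllib.request.urlopen("https://models.example.internal/v1/generate", context=ctx)
```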

Model Robustness and Adversarial Attacks

Keeping AI models resilient requires defending them against adversarial attacks, in which malicious inputs are crafted to trick the model into producing incorrect predictions. Two common countermeasures are adversarial training, in which the model is exposed to adversarial examples during training so it learns to resist them, and defensive distillation, which reduces the model's sensitivity to small perturbations in the input. Regular input validation and testing with adversarial samples can further improve model robustness.
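
To illustrate what an adversarial example is, the sketch below applies the Fast Gradient Sign Method (FGSM), a standard white-box attack, to a tiny logistic-regression model whose input gradient has a closed form. The weights, input, and perturbation size are made-up values; for deep networks, the gradient would come from a framework's automatic differentiation. Adversarial training, as described above, would add such perturbed inputs (with their correct labels) to the training data.

```python
import math


def predict(w, b, x):
    """Logistic regression: probability that x belongs to class 1."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def _sign(g):
    """Return -1, 0, or +1."""
    return (g > 0) - (g < 0)


def fgsm(w, b, x, y, epsilon):
    """Fast Gradient Sign Method for binary cross-entropy loss.

    For logistic regression, dLoss/dx_i = (p - y) * w_i, so each feature is
    nudged by epsilon in the direction that increases the loss.
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * _sign(g) for xi, g in zip(x, grad)]


w, b = [2.0, -1.0], 0.0  # hypothetical trained weights
x, y = [0.5, 0.2], 1     # an input the model correctly labels as class 1
x_adv = fgsm(w, b, x, y, epsilon=0.5)
print(x_adv, round(predict(w, b, x), 3), round(predict(w, b, x_adv), 3))
```

A small, targeted nudge to each feature is enough to push the predicted probability below 0.5 and flip the label, which is exactly the failure mode adversarial training aims to reduce.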

Regulatory Compliance

Adhering to industry-specific regulations ensures that AI solutions comply with legal standards and protect user rights. For instance, the GDPR (General Data Protection Regulation) mandates data protection and privacy for individuals in the European Union, while HIPAA (Health Insurance Portability and Accountability Act) sets standards for protecting health information in the U.S. PCI-DSS (Payment Card Industry Data Security Standard) focuses on securing payment card data. Complying with these regulations requires appropriate data handling practices, respect for data subject rights, and transparency.

Auditability and Transparency

Keeping audit trails and ensuring openness are central to accountability and regulatory compliance in AI decision-making. This entails logging all interactions with the AI system, including data inputs, model predictions, and the reasoning behind decisions, to provide a clear record of the system's activities. Building explainable AI models that offer insight into their decision-making processes is another way to ensure transparency and give stakeholders confidence in the AI system's outcomes.
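
One way to make such an audit trail tamper-evident is to hash-chain the log entries, so that editing any past record breaks its hash and every link after it. This is a minimal in-memory sketch using only the standard library; the event fields are hypothetical, and a real system would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys) of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> dict:
    """Append an event, linking it to the hash of the previous entry."""
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event": dict(event),  # copy so later caller-side edits don't alter the log
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry = dict(body, hash=_digest(body))
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash and link; any edited entry makes this return False."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: entry[k] for k in ("timestamp", "event", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"input_id": "req-001", "prediction": "approve", "model": "v3"})
append_entry(audit_log, {"input_id": "req-002", "prediction": "review", "model": "v3"})
print(verify_chain(audit_log))
```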

Security Tools and Frameworks

Tools like IBM's AI Explainability 360 and Microsoft's Counterfit can help improve AI security and compliance. AI Explainability 360 is an open-source toolkit of algorithms for interpreting and explaining AI model decisions, which supports transparency and trust. Counterfit, designed for testing AI systems against adversarial attacks, lets businesses evaluate and strengthen their models' defenses. Together, these tools can help address security issues and meet the compliance requirements for AI systems.

Leveraging Gen AI with Successive Digital for Your Custom Solutions

Successive Digital, a digital transformation company, offers a comprehensive suite of solutions, including digital strategy, product engineering, CX, cloud, AI/ML, and Gen AI consulting services. We help companies continuously optimize their business and technology to transform how they connect with customers and grow.

Here's how we helped a leading retail and eCommerce business with a customized generative AI solution. The client wanted to improve its marketing efficiency by using generative AI to create dynamic landing page content. The objective was to produce SEO-optimized text tailored to specific keywords, audience preferences, and brand tone, improving organic traffic, search engine rankings, and conversion rates. The challenges included automating keyword-based content generation, integrating with Jira for seamless content management, and optimizing meta titles and descriptions. The solution combined keyword intake and prompt optimization to generate relevant content, automated Jira ticket creation for content tasks, and linked educational content with products on the client's site. Built on the AWS ecosystem, with Amazon Bedrock, AWS Lambda, and Amazon API Gateway for the backend and Anthropic's Claude model for generation, the solution increased productivity by 40% and business agility by 30% while maintaining robust performance and security.

Conclusion

We are entering a new era in which generative AI powers the most prosperous and self-sufficient businesses. Businesses already use generative AI's potential to install, manage, and track intricate systems with unprecedented ease and effectiveness. By harnessing this technology, organizations can make better-informed decisions, take prudent risks, and stay adaptable in rapidly shifting market conditions. As we continue to push its boundaries, generative AI's applications will grow and become more integral to our daily lives. With generative AI, businesses can reach new levels of creativity, efficiency, speed, and accuracy, giving them a decisive edge in today's fiercely competitive market. So what are you waiting for? Get your custom gen AI solution designed today.
