Every company, regardless of industry, now uses software and applications to differentiate itself from the competition, with the goal of delivering exceptional digital experiences to customers. And who builds those applications and digital experiences? Developers. To achieve the kind of business agility in which developers can build and push changes multiple times a day, companies are moving to cloud-native operating environments and increasingly embracing cloud computing services and cloud-native tools to meet changing business needs.

A cloud-native environment provides the resources needed to design, test, deploy, and manage business applications on demand. This is driving organizations across all industries to adopt scalable, flexible, and easily updatable hybrid and multi-cloud IT systems. In one of its reports, Gartner projected that more than two-thirds of spending on application development will go toward cloud technologies in 2025, and noted that more than 20% of applications are now being built in the cloud.

The future of online applications relies on cloud-native development because it lets developers build and run applications in modern, dynamic environments. It also allows businesses to adopt new processes and technologies and respond quickly to changing customer needs.

Because cloud-native development is such a broad topic, this blog post offers comprehensive, practical insights into developing applications optimized for cloud environments. We also cover the key technologies, their benefits, and the supporting processes and methodologies businesses need to design, build, and deploy applications that fully harness the capabilities of cloud platforms and help them stay ahead of the competition.

Foundation of Cloud Native

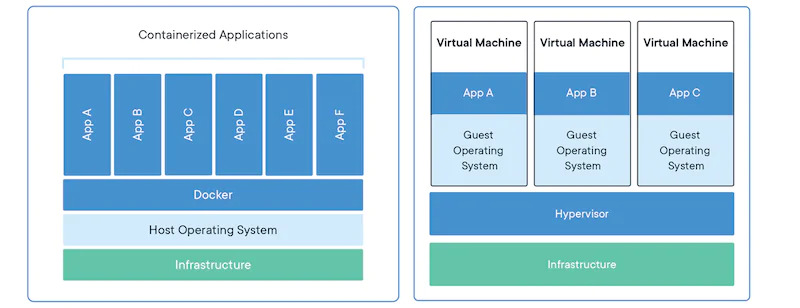

The growing adoption of microservices architecture, containers, service meshes, immutable infrastructure, and declarative APIs exemplifies the mechanisms behind cloud-native app development. For example, Docker containers simplified virtualization at the operating system (OS) level. Google ran its own containerized workloads on internal container orchestration systems for about 15 years and then, in 2014, open-sourced Kubernetes, which built on that experience; this sparked widespread adoption of Kubernetes and container orchestration. Beyond these, many other processes, technologies, and tools laid the foundation for cloud-native development to flourish.

What is Cloud Native?

Cloud-native is an approach to building applications as microservices and packaging and running them on a containerized, dynamically orchestrated platform that fully leverages the cloud computing model (SaaS, PaaS, and IaaS). It encourages teams to design applications around their use cases and deploy them without worrying about the specific underlying infrastructure. This approach allows companies to build and run scalable applications in modern, dynamic environments, including private, public, hybrid, and multi-cloud.

Cloud-native apps are built from the ground up using cloud-native technologies, designed as loosely coupled systems, and optimized to exploit cloud advantages such as scale, performance, and high availability. Organizations also use managed services and continuous delivery to gain reliability and faster time to market. The result is improved speed, scalability, and, ultimately, margins when developing and running modern applications.

What is the CNCF?

CNCF stands for Cloud Native Computing Foundation. Part of the Linux Foundation, it is an open-source foundation that encourages organizations to adopt cloud-native architecture by providing the required open-source tools and community support. Big names such as Google, IBM, Intel, Box, Cisco, and VMware are members. CNCF hosts and supports critical cloud-native projects such as Kubernetes and Prometheus.

According to CNCF’s FAQ on why the foundation is needed, companies are realizing that they need to be software companies, even if they are not in the software business. Cloud-native development allows IT and software teams to move faster. Adopting cloud-native technologies and practices enables companies to build software in-house, lets business stakeholders partner closely with IT, and helps them keep up with competitors and deliver better services to their customers.

Because scalability and flexibility are key to cloud-native development, CNCF promotes immutable infrastructure, microservices, containers, and related principles as the building blocks of cloud-native architecture.

What is the 12-Factor Cloud Native Application Development Methodology?

As developers move apps to the cloud, they gain experience designing and deploying cloud-native apps. From that experience, a set of best practices, commonly known as the twelve factors, has emerged. Designing an app with these factors in mind lets you deploy apps to the cloud that are more portable and resilient than apps deployed to on-premises environments, where provisioning new resources takes longer.

However, designing modern, cloud-native apps requires a change in how you think about software engineering, for example in configuration methods and deployment pipelines, compared with designing apps for on-premises environments. Let’s explore the thinking behind each of the twelve factors:

-

Codebase

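This factor calls for one codebase per app, or per service in a microservices setup, tracked in a version control system such as Git, with many deploys (development, staging, production) made from that single codebase. Keeping one codebase per service also makes a branching strategy easier to execute.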

-

Dependencies

To bring clarity and control over an application’s dependencies, the 12-factor methodology calls for declaring every dependency explicitly (for example, in a manifest with pinned versions) and isolating the app from system-wide packages, so it never relies on something being implicitly installed. This keeps the code clean, lets the application run consistently across environments, and makes issues caused by specific dependency versions easy to identify and address.

-

Configuration

This factor covers configuration management: developers should not hard-code environment-specific values such as database connection strings or credentials. Instead, the 12-factor app stores config in environment variables, strictly separated from code, so the same build can adapt to different environments without code changes.
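A minimal Python sketch of the idea, assuming the settings arrive as environment variables (the twelve-factor convention). The variable names `DATABASE_URL`, `CACHE_TTL_SECONDS`, and `DEBUG` and the `Settings`/`load_settings` names are hypothetical examples:

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    database_url: str
    cache_ttl_seconds: int
    debug: bool


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read configuration from the environment instead of hard-coding it.

    The variable names are illustrative; use whatever your platform injects.
    """
    try:
        database_url = env["DATABASE_URL"]  # required: fail fast if it is missing
    except KeyError:
        raise RuntimeError("DATABASE_URL is not set") from None
    return Settings(
        database_url=database_url,
        cache_ttl_seconds=int(env.get("CACHE_TTL_SECONDS", "300")),  # optional, with a default
        debug=env.get("DEBUG", "false").lower() in ("1", "true", "yes"),
    )
```

The same build can then run in staging and production; only the environment it is started with differs. Passing a plain dictionary as `env` also makes the loader easy to test.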

-

Backing Services

Because microservices-based architecture is central to cloud-native development, the 12-factor app treats backing services such as databases, message queues, and caches as attached resources, accessed through a URL or other locator stored in config and integrated loosely. This makes it easy to replace or maintain those resources without changing code, and makes the overall architecture more robust, scalable, and resilient when it depends on external services.
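To illustrate "attached resources", here is a small sketch that turns a backing-service URL taken from config into connection parameters using Python’s `urllib.parse`. The `ResourceHandle`/`attach` names and the example hostnames are hypothetical:

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlsplit


@dataclass(frozen=True)
class ResourceHandle:
    scheme: str           # e.g. "postgres" or "redis"
    host: str
    port: Optional[int]
    name: str             # database name, queue name, etc.


def attach(resource_url: str) -> ResourceHandle:
    """Turn a backing-service URL from config into connection parameters.

    Application code only sees this handle, so swapping a local database for
    a managed one is a config change (a new URL), not a code change.
    """
    parts = urlsplit(resource_url)
    if not parts.scheme or not parts.hostname:
        raise ValueError(f"not a resource URL: {resource_url!r}")
    return ResourceHandle(
        scheme=parts.scheme,
        host=parts.hostname,  # credentials in the URL are not part of the hostname
        port=parts.port,
        name=parts.path.lstrip("/"),
    )
```

A local `postgres://localhost:5432/orders` and a managed `postgres://orders.db.example.internal:5432/orders` produce handles of the same shape, so the code that uses them does not change.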

-

Build, Release, Run

The 12-factor methodology strictly separates the build stage (turning code and its dependencies into an executable artifact), the release stage (combining that artifact with environment-specific config), and the run stage (executing the release). Because the same tested artifact moves unchanged from development to production, this separation provides consistency and minimizes the “it works on my machine” problem, where code behaves differently across environments due to variations in dependencies or configuration.
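The separation can be modeled in a few lines. In this sketch (an assumption, not a prescribed format), a release is an immutable pairing of one build ID, such as a container image digest, with one set of config, and its ID is derived from both; `make_release`, `run`, and the example digest are hypothetical:

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Mapping, Tuple


@dataclass(frozen=True)
class Release:
    """An immutable pairing of one build artifact with one set of config."""
    build_id: str                          # output of the build stage (hypothetical, e.g. an image digest)
    config: Tuple[Tuple[str, str], ...]    # sorted (key, value) pairs, so they can't be mutated
    release_id: str


def make_release(build_id: str, config: Mapping[str, str]) -> Release:
    """Release stage: combine a build with config and derive a stable release ID."""
    items = tuple(sorted(config.items()))
    payload = json.dumps({"build": build_id, "config": items})
    release_id = hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
    return Release(build_id=build_id, config=items, release_id=release_id)


def run(release: Release) -> str:
    """Run stage: start what the release describes; it never modifies the code."""
    settings = dict(release.config)
    return f"running build {release.build_id} (release {release.release_id}) in {settings.get('ENV', 'unknown')}"
```

Promoting the same build to staging and production yields two distinct releases that share one `build_id`; changing anything means cutting a new release rather than editing a running one.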

-

Processes

To deliver the cloud-native promises of scalability, fault tolerance, resource efficiency, and simplicity, the 12-factor approach runs the app as one or more stateless processes that share nothing; any data that must persist lives in a backing service such as a database or cache. Stateless processes are well suited to modern cloud-native and microservices architectures, which need to be agile, adaptable, and capable of handling dynamic workloads.
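A minimal sketch of a stateless request handler, assuming session data lives in an external store. `InMemoryStore` is only a stand-in so the example runs without a server; in production it would be something like Redis or a database. All names here are hypothetical:

```python
from typing import Dict, Protocol


class SessionStore(Protocol):
    """Any external backing store could satisfy this interface."""
    def get(self, key: str) -> int: ...
    def set(self, key: str, value: int) -> None: ...


class InMemoryStore:
    """Stand-in for an external store so the sketch runs without a server."""

    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def get(self, key: str) -> int:
        return self._data.get(key, 0)

    def set(self, key: str, value: int) -> None:
        self._data[key] = value


def add_to_cart(store: SessionStore, user_id: str, quantity: int) -> int:
    """Stateless handler: state is read from and written back to the store,
    never kept in process memory, so any instance can serve the next request."""
    key = f"cart:{user_id}"
    total = store.get(key) + quantity
    store.set(key, total)
    return total
```

Because the handler keeps nothing between calls, two requests from the same user can land on different process instances and still see a consistent cart.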

-

Port Binding

A 12-factor app is self-contained and exports its services by binding to a port, rather than relying on a web server injected into the execution environment at runtime. The platform tells the app which port to listen on, and other apps or services consume it through a URL. This keeps the app isolated from the details of the underlying infrastructure, enhances flexibility, promotes portability, and makes it easier to run, move, or upgrade the service without changing application code.
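A minimal sketch using only Python’s standard library, assuming the platform passes the port in a `PORT` environment variable (a common convention, treated here as an assumption). The handler, `make_server`, and `main` names are hypothetical:

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Minimal request handler; a real service would route to its own logic."""

    def do_GET(self) -> None:
        body = b"ok\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args: object) -> None:
        pass  # silence the default per-request logging to stderr in this sketch


def make_server(port: int) -> HTTPServer:
    """The app embeds its own HTTP server instead of relying on one injected at runtime."""
    return HTTPServer(("0.0.0.0", port), HelloHandler)


def main() -> None:
    # The execution environment tells the app which port to bind via $PORT.
    port = int(os.environ.get("PORT", "8080"))
    make_server(port).serve_forever()
```

Calling `main()` starts serving; the platform’s router or load balancer then forwards traffic to whatever port it assigned.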

-

Concurrency

To meet the demands of modern web and cloud-native applications, apps should scale out through the process model: rather than growing a single large process, you run more instances of each process type (for example, web processes for HTTP traffic and worker processes for background jobs).

-

Disposability

For resilience, an application should be disposable: its processes can be started or stopped at a moment’s notice, so a failed or misbehaving process can quickly be replaced by a new one. This requires fast startup and graceful shutdown, in which a process stops taking new work, finishes or hands back in-flight work, and exits cleanly, minimizing downtime, service interruptions, and the risk of data corruption or incomplete transactions.
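Container platforms such as Kubernetes send `SIGTERM` before stopping a container, so graceful shutdown usually starts there. This Python sketch (the `Worker` class and its job list are hypothetical) only sets a flag in the signal handler and lets the loop finish the job in progress before exiting:

```python
import signal
import time
from collections import deque
from typing import Deque, List


class Worker:
    """Processes queued jobs and stops cleanly when asked to (e.g. on SIGTERM)."""

    def __init__(self, jobs: List[str]) -> None:
        self.pending: Deque[str] = deque(jobs)
        self.done: List[str] = []
        self.stopping = False

    def request_stop(self, signum: int, frame: object) -> None:
        # Signal handler: only set a flag; the run loop decides when to stop.
        self.stopping = True

    def install(self) -> None:
        # Must be called from the main thread.
        signal.signal(signal.SIGTERM, self.request_stop)

    def run(self) -> None:
        # Check the flag between jobs, so a job that has started always completes.
        while self.pending and not self.stopping:
            job = self.pending.popleft()
            time.sleep(0.01)  # placeholder for real work
            self.done.append(job)
        # Jobs still in self.pending would be returned to a durable queue here,
        # so another instance can pick them up.
```

Keeping the handler to a single assignment avoids doing real work inside a signal handler, and checking the flag between jobs ensures no job is abandoned halfway.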

-

Dev/prod parity

Because the 12-factor app is built for continuous deployment, it keeps development, staging, and production as similar as possible. That includes using the same types and versions of backing services, such as the app’s database, queueing system, or cache, in every environment, which reduces surprises when code moves to production.

-

Logs

The 12-factor app treats logs as event streams: it writes them, unbuffered, to standard output and leaves routing and storage to the execution environment. This provides real-time visibility, makes debugging and troubleshooting easier, supports scalability, and makes it simple to integrate with a wide range of log-processing tools and services. Distributed and containerized applications in particular benefit from treating logs as event streams.
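A sketch with Python’s standard `logging` module: each record becomes one JSON object per line on stdout, and the platform collects the stream. The JSON field names and the `"app"` logger name are assumptions chosen for illustration:

```python
import json
import logging
import sys
from typing import Optional, TextIO


class JsonLineFormatter(logging.Formatter):
    """Render each log record as a single JSON object on its own line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "msg": record.getMessage(),
        })


def configure_logging(stream: Optional[TextIO] = None) -> logging.Logger:
    """Send the app's event stream to stdout; the platform handles routing and storage."""
    # StreamHandler flushes after every record, so events are not held in a buffer.
    handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
    handler.setFormatter(JsonLineFormatter())
    logger = logging.getLogger("app")
    logger.handlers[:] = [handler]  # replace handlers so repeated calls don't duplicate output
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid double-logging through the root logger
    return logger
```

One JSON object per line is easy for log collectors to parse. The app itself never opens or rotates log files.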

-

Admin Processes

In cloud-native environments, administrative and maintenance tasks, such as database migrations or one-off scripts, run as one-off processes in the same environment as the app, using the same codebase and config. Automating and scripting these activities, executing them within the runtime environment, and shipping them as part of the release keeps development and deployment streamlined and manageable.

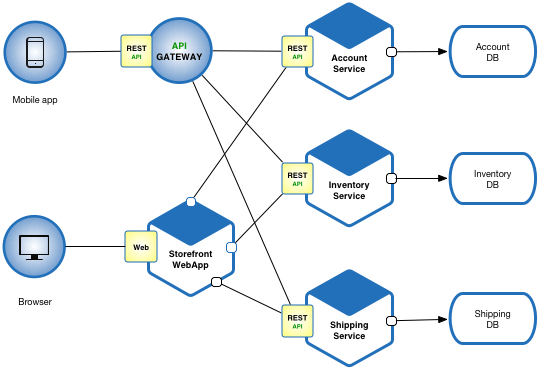

What Is Cloud Native Application Development?

Cloud-native application development is built on DevOps principles, fostering a culture and environment in which software is built, tested, and released consistently. Agile development practices make the release process predictable and dependable and enable faster feedback. Let’s look at these concepts in more detail.

-

Continuous Integration

Continuous Integration (CI) and Continuous Delivery/Deployment (CD) are a set of guiding principles that enable development teams to deliver code changes more frequently and reliably. CI’s technical objective is to establish a consistent, automated way to build, package, and test applications. This consistency encourages developers to commit code more frequently, which improves collaboration and software quality.

-

Continuous Delivery/Continuous Deployment

Continuous Delivery extends the process started by Continuous Integration. CD automates the deployment of applications to infrastructure environments such as development, quality assurance, and staging, and runs tests such as integration and performance tests along the way. With Continuous Delivery, every change is kept deployable, but the release to production usually requires a manual approval step; Continuous Deployment removes that step and automates the entire process from code check-in to production deployment.
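The difference between the two comes down to one gate. The sketch below is a toy pipeline runner, not any real CI tool’s API. Stages run in order and stop at the first failure, and the production deploy happens automatically only when `continuous_deployment` is true; otherwise it waits for approval. The stage names are hypothetical:

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]  # (name, step that returns True on success)


def run_pipeline(
    stages: List[Stage],
    continuous_deployment: bool,
    approved_for_prod: bool = False,
) -> List[str]:
    """Run pre-production stages in order, stopping at the first failure.

    Continuous delivery: the production deploy waits for a manual approval.
    Continuous deployment: it happens automatically once every stage passes.
    Returns the names of the steps that completed.
    """
    completed: List[str] = []
    for name, step in stages:
        if not step():
            return completed  # fail fast: nothing after a failing stage runs
        completed.append(name)
    if continuous_deployment or approved_for_prod:
        completed.append("deploy-production")
    return completed
```

For example, with stages for build, unit tests, staging deploy, and integration tests, continuous delivery stops after integration tests until someone approves. Continuous deployment goes straight on to production.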

-

Orchestration

Containers isolate applications in lightweight execution environments that share the host operating system kernel. They are highly resource-efficient, and Docker has become the most widely used container technology. As an application grows, so does the number of containers, and running them efficiently with high uptime requires an orchestration tool. Cloud-native applications are typically deployed on Kubernetes, an open-source platform for automating the deployment, scaling, and management of containerized applications, which has become the de facto platform for running cloud-native applications.
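Orchestrators like Kubernetes are declarative. You state how many replicas of each workload you want, and control loops keep comparing that desired state with what is actually running. Here is a toy, single-pass version of that comparison. It is a simplification for illustration, not Kubernetes code, and the workload names are hypothetical:

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], running: Dict[str, int]) -> List[str]:
    """One pass of a declarative control loop: compare desired replica counts
    with what is running and return the actions needed to close the gap."""
    actions: List[str] = []
    for app in sorted(set(desired) | set(running)):
        want = desired.get(app, 0)   # workloads no longer declared should go to zero
        have = running.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} x {app}")
        elif have > want:
            actions.append(f"stop {have - want} x {app}")
    return actions
```

A real orchestrator runs this kind of loop continuously. If a container crashes, the running count drops below the desired count, and the next pass starts a replacement.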

-

DevOps

DevOps, derived from the amalgamation of “development” and “operations,” encompasses the organizational structure, practices, and culture necessary for enabling agile development and scalable, reliable operations. DevOps emphasizes a culture of collaboration, automation, and alignment between development and operations teams. Modern organizations often consolidate development and operational responsibilities within a single DevOps team, fostering a unified approach to development, deployment, and production operation responsibilities.

-

Elasticity – Dynamic Scaling

Cloud-native applications leverage the elasticity of the cloud to adjust resources dynamically during usage spikes. For instance, if a cloud-based application experiences a surge in demand, it can automatically allocate additional compute resources to accommodate the increased load. Once the demand subsides, these additional resources can be shut down, allowing the application to seamlessly scale as needed.
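One widely used scaling rule is the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance of 1.0. This Python sketch applies that rule with min/max bounds. The default bounds are arbitrary assumptions:

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Proportional scaling rule modeled on the Kubernetes HPA formula:
        desired = ceil(current_replicas * current_metric / target_metric)
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # respect configured bounds
```

For instance, 4 replicas averaging 90% CPU against a 60% target scale to ceil(4 × 1.5) = 6. A quiet period at 15% CPU scales them back down to 1.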

-

Site Reliability Engineering (SRE)

In an always-on world, companies that need their services to be available at all times are adopting SRE principles and practices from the outset. Google pioneered SRE to gain visibility into every facet of its services, nodes, clusters, and data centers, increasing product velocity while keeping releases reliable. Adopting SRE involves creating incident playbooks and running practice drills so teams are prepared to manage and mitigate inevitable incidents.

In short, cloud-native development is a comprehensive approach that encompasses DevOps, continuous integration and delivery, container orchestration with Kubernetes, elastic scaling, and the principles of Site Reliability Engineering. Together, these practices and technologies empower organizations to build resilient, adaptable, and highly available cloud-native applications.
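A common SRE tool for balancing release speed against reliability is the error budget, which is the downtime an availability target allows. The arithmetic is simple. A 99.9% target over 30 days allows (1 − 0.999) × 30 × 24 × 60 = 43.2 minutes of downtime. A minimal Python sketch, where the function names and the 30-day window are assumptions:

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Allowed downtime, in minutes, for an availability SLO over a window."""
    if not 0 < slo < 1:
        raise ValueError("SLO must be a fraction between 0 and 1, e.g. 0.999")
    return (1 - slo) * window_days * 24 * 60


def budget_remaining(slo: float, downtime_minutes: float, window_days: int = 30) -> float:
    """Fraction of the error budget still unspent (negative means overspent)."""
    budget = error_budget_minutes(slo, window_days)
    return (budget - downtime_minutes) / budget
```

A team that has already used 21.6 minutes of downtime against a 99.9% target has half its budget left. Many teams slow risky releases as that fraction approaches zero.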

Benefits of Cloud Native Application Development

At its core, cloud-native development is about teams, people, and technologies, and about establishing collaboration among all of them. As a result, it benefits every stakeholder involved in application development: companies gain competitive advantages in various ways, while developers get better support for turning innovative ideas into working software. Let’s explore these benefits one by one.

-

Increased Efficiency

Cloud-native development requires agile practices such as DevOps, since continuous delivery is central to the cloud-native model. With DevOps, developers use automated tools and cloud services and work in a modern engineering culture to build scalable applications faster.

-

Reduced Cost

With a cloud-native approach, companies move from a capital expenditure (CapEx) model to an operational expenditure (OpEx) model, paying for resources as they use them instead of buying infrastructure up front, which can produce long-term savings. These savings can also be passed on to the company’s clients.

-

Higher Availability

Companies can build resilient, highly available applications with a cloud-native approach. It supports rolling out feature updates without downtime, and companies can scale up app resources during peak seasons to maintain a positive customer experience.

-

Faster Development

With ready-to-deploy containerized applications and DevOps practices, developers save development time and deliver better-quality applications. They can push several updates a day without shutting down the app.

-

Platform Independence

Because the cloud provider handles the underlying hardware and its compatibility, developers can build and deploy apps in the cloud consistently and reliably. This frees the time and effort developers used to spend setting up infrastructure for delivering value in the application itself.

Challenges of Cloud Native Application Development

While cloud-native computing is great in theory and offers several benefits, implementing it is neither easy nor straightforward, especially for enterprises with long-standing legacy systems or applications. Let’s explore the key challenges businesses face when they adopt a cloud-native model.

-

Security

Cloud-native development comes with a shared responsibility model: cloud providers are responsible for the security of the cloud, while developers and businesses are responsible for the security of their applications in the cloud. Understanding this new model for securing applications is itself a challenge.

-

Handling Data

Many teams lack the technical expertise to handle data in the cloud. Professionals whose skills were built around legacy systems and culture need time to adapt, and that transition can slow the pace of innovation.

-

Vendor Lock-in

If you have invested heavily in a specific platform or tool, you may now face constraints due to vendor lock-in. Hyperscale cloud providers offer feature-rich, user-friendly platforms, but they often come with the drawback of lock-in. Ultimately, the essence of cloud-native computing is to let you leverage the capabilities of hyperscale cloud providers while retaining the flexibility to pursue multi-cloud and hybrid-cloud architectures.

-

Cloud Native Concepts are Difficult to Communicate

Communicating cloud-native concepts can be challenging because of their inherent complexity, compounded by the abundance of available choices. Executives need to grasp the significance and intricacies of cloud-native solutions before committing to technology investments, and technical leaders often face an uphill task explaining concepts such as microservices and containers to them.

-

High Operational and Technology Costs

The cloud offers substantial cost advantages because organizations pay only for the computing resources they use. In most cases, the overall cost of cloud services is lower than the upfront investment needed to purchase, design, support, and maintain on-premises infrastructure. However, achieving optimal cloud usage is challenging: without active management, costs from over-provisioned or idle resources can add up quickly, so organizations need deliberate cost-optimization strategies to realize the savings.

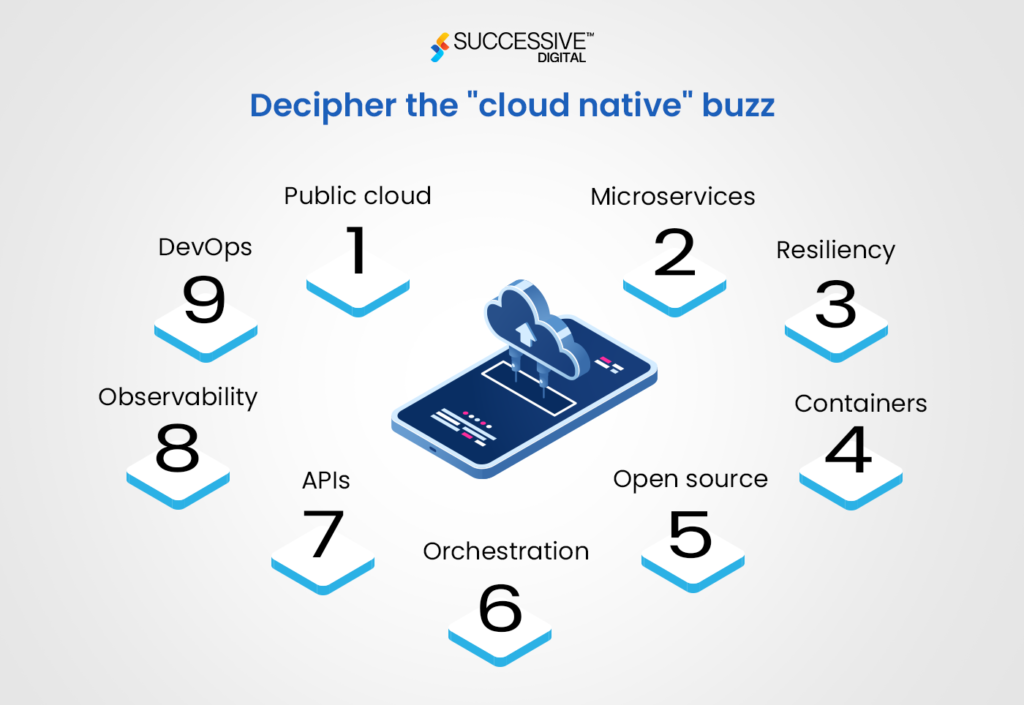

Architecture of Cloud Native Application Development

Cloud-native architecture combines application components that developers use to build and run scalable cloud-native applications. These components typically include immutable infrastructure, microservices, declarative APIs, containers, and service meshes. Let’s look at each of them and their significance.

-

Immutable Infrastructure

Setting up servers manually is time-consuming. Because cloud computing lets infrastructure be provisioned declaratively, cloud-native encourages immutable infrastructure, in which servers hosting cloud-native applications are never modified after deployment. When a change is needed, such as an update or more computing resources, a new server is built from a common, versioned image and the old one is discarded. This makes cloud-native deployment a predictable process.

-

Designed As Loosely Coupled Microservices

Microservices are small, self-contained software modules that work together to form a fully functional cloud-native application. Each microservice addresses a specific, narrowly defined problem. Microservices are loosely coupled: they are autonomous components that interact with each other only through well-defined interfaces. Developers can change the application by modifying individual microservices, each of which may be written in a different language. This approach helps the application stay operational even if one microservice encounters an issue.

-

Developed With Best-of-breed Languages And Frameworks

In a cloud-native application, each service is built with the programming language and framework best suited to its function. Cloud-native applications are therefore polyglot, with services using a diverse range of languages, runtimes, and frameworks. For instance, developers might build a real-time streaming service with WebSockets in Node.js, use Python for a machine-learning-based service, and choose Spring Boot to expose REST APIs. This granular approach lets developers pick the optimal language and framework for each task.

-

Centered Around APIs For Interaction and Collaboration

An Application Programming Interface (API) is a mechanism that two or more software programs use to exchange information. In cloud-native systems, APIs play a crucial role in integrating loosely coupled microservices. Rather than spelling out the step-by-step process for reaching a particular outcome, an API describes the data a microservice requires and the results it can provide.

-

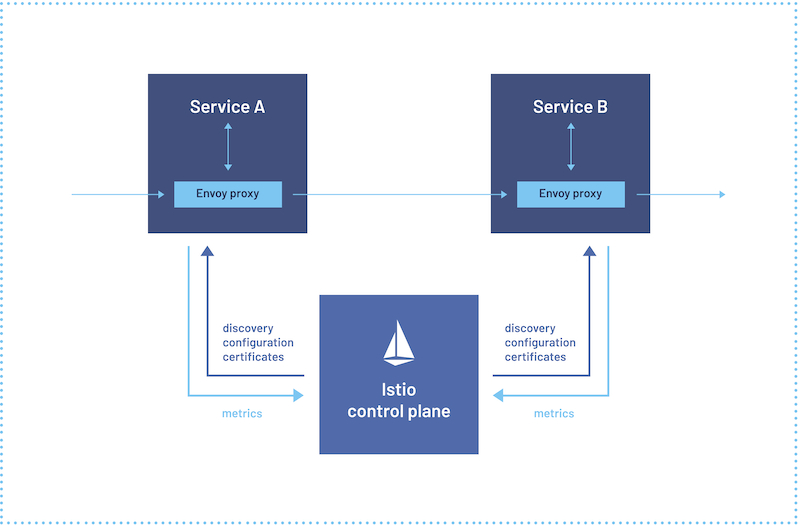

Service Mesh

A service mesh is a software layer in the cloud infrastructure that manages communication between microservices. Developers use a service mesh to add capabilities such as traffic routing, retries, mutual TLS encryption, and observability without writing new code in the application.

Image source: Istio

One of the best-known examples of a service mesh is Istio, which is widely adopted in microservices architectures and Kubernetes deployments.

-

Containers

Containers are the smallest unit of compute in a cloud-native application. They are software packages that bundle a microservice’s code with the dependencies it needs to run, such as resource files, libraries, and scripts. Containerizing microservices lets cloud-native applications run largely independently of the underlying host environment, because containers share the host kernel but carry everything else with them. As a result, developers can deploy cloud-native applications on-premises, on cloud infrastructure, or across hybrid cloud setups.

-

Serverless – Compute Without Compute

Serverless computing is a cloud-native model in which the cloud provider takes full responsibility for managing the underlying server infrastructure. Developers choose serverless computing because the platform automatically scales and configures the infrastructure to match the application’s needs. With serverless architecture, developers are charged only for the compute resources the application actually consumes, and the platform automatically releases those resources when the application stops running.
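As a concrete example, here is a minimal function in the `handler(event, context)` shape that AWS Lambda uses for Python. It returns a response in the format API Gateway’s proxy integration expects. The request payload, a JSON body with a `name` field, is a hypothetical example:

```python
import json
from typing import Any, Dict


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Entry point in the shape AWS Lambda uses for Python functions.

    The platform invokes this once per event and runs more copies in parallel
    as demand grows; there is no server for the developer to manage.
    """
    body = json.loads(event.get("body") or "{}")  # hypothetical JSON payload
    name = body.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }
```

Because the function is plain Python, it can be tested locally by calling `handler` with a dictionary and `None` for the context, as long as the code doesn’t use the context object.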

-

Resiliency at the Core Of the Architecture

Murphy’s law states that “anything that can go wrong will go wrong,” and the principle applies to software systems as well. In a distributed system, failures are an inherent part of the landscape: hardware components can malfunction, and the network may experience temporary disruptions. On rare occasions, an entire service or region may suffer a significant outage, which calls for thorough planning. In cloud-native development, resilience is the system’s ability to recover from failures and keep functioning. It is not about preventing failures but about responding to them in a way that avoids downtime or data loss. The primary objective of resiliency is to return the application to a fully operational state after a failure. Resiliency encompasses the following key aspects:

- High Availability (HA): This entails ensuring that the application can continue running smoothly without experiencing substantial downtime, even when faced with occasional failures.

- Disaster Recovery (DR): DR focuses on the application’s capability to recover from infrequent yet significant incidents, such as non-transient, large-scale failures that may impact an entire region or service.

One of the primary strategies to enhance cloud-native application resilience is through redundancy. Achieving HA and DR involves implementing measures such as multi-node clusters, deploying applications across multiple regions, data replication, eliminating single points of failure, and implementing continuous monitoring.

Importance of DevSecOps in Cloud Native Application Development

Whether they are streamlining software builds, testing, or deployment, DevOps teams collaborate closely and share responsibility for the entire lifecycle. DevSecOps takes this collaboration a step further with a “shift left” approach that involves security teams from the very beginning of the DevOps pipeline. It lets teams adopt a proactive approach to integrating security measures into the development process, resulting in faster, safer, and more cost-effective software delivery.

Note that while DevSecOps involves encouraging developers to write more secure code, it is not about burdening developers with most of the security work. Instead, “shifting left” means moving security activities and policy enforcement closer to developers by establishing the guardrails, platforms, and tools that make it easier to build secure applications. For instance, a software bill of materials (SBOM) maintains a comprehensive, machine-readable list of an application’s dependencies, including third-party services, software packages, open-source software stacks, and codebases, and it is generated and updated automatically. This simplifies tracking application changes and identifying and fixing vulnerabilities. In a fully automated CI/CD pipeline, DevSecOps helps catch vulnerabilities and misconfigurations before each release reaches production.

How Is Cost-Efficiency Maintained Through Microservices?

Microservices architecture is at the core of cloud-native development, and its distinguishing strength is a modular structure that makes development efficient: engineers can work on different parts of the same platform simultaneously. Microservice architecture avoids tight coupling, where code in one part of an application conflicts with or depends heavily on other parts, and instead favors loosely coupled, independent services.

Loosely coupled microservices take less time to develop and can be written in programming languages developers already know. Microservices applications offer faster time to market, scalability, better resource allocation, and ongoing optimization, while reducing IT and cloud platform costs.

How Does Cloud Native Application Development Ensure Scalability And Resilience?

Cloud-native development is a comprehensive approach. Here are a few methods used to ensure the scalability of cloud-native applications:

- Auto-scaling: Cloud-native platforms often offer auto-scaling capabilities. This means that resources, such as virtual machines or containers, can automatically increase or decrease based on demand. When traffic spikes, additional resources are provisioned to handle the load, ensuring that the application remains responsive and available.

- Cloud Services: Cloud providers offer a range of services that support scalability and resilience. These include load balancers, content delivery networks (CDNs), and managed databases. Load balancers distribute traffic evenly across multiple instances to prevent overloading, CDNs cache content globally to reduce latency, and managed databases offer automatic failover and backup options.

- Infrastructure as Code (IaC): Cloud-native development often relies on IaC principles. Infrastructure is defined and managed through code, making it easy to replicate, scale, and recover resources as needed. This approach ensures that the infrastructure itself is resilient and can be quickly recreated if necessary.

Here are some of the strategies used to maintain resilience with cloud-native applications.

- Retry transient failures

- Load balance across instances

- Degrade gracefully

- Throttle high-volume tenants/users

- Use a circuit breaker

- Apply compensating transactions

- Test for resiliency
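Two of these strategies, retrying transient failures and using a circuit breaker, can be sketched in plain Python. The retry uses exponential backoff with full jitter. The breaker fails fast after repeated failures and allows a trial call after a cool-down. `TransientError`, the thresholds, and the delays are hypothetical choices, and the breaker is a single-threaded simplification:

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, HTTP 503, ...)."""


class CircuitOpenError(Exception):
    """Raised instead of calling the dependency while the circuit is open."""


def retry(call: Callable[[], T], attempts: int = 4, base_delay: float = 0.1,
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry transient failures with exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller degrade gracefully
            # Wait a random time up to base_delay * 2^attempt before retrying.
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    raise AssertionError("unreachable")


class CircuitBreaker:
    """Stop calling a failing dependency for a cool-down period, then allow a trial call.

    Single-threaded sketch: it does not limit concurrent trial calls.
    """

    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_after:
            raise CircuitOpenError("circuit open: failing fast")
        # Either closed, or half-open after the cool-down: try the call.
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            raise
        self.failures = 0
        self.opened_at = None  # a success closes the circuit
        return result
```

The two patterns work well together. Retries absorb brief glitches, and the breaker keeps retries from piling onto a dependency that is clearly down.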

What Should You Know About ROI and TCO When Adopting Cloud Native Application Development?

Because cloud-native development follows an operational expenditure (OpEx) model, it is very different from a capital expenditure (CapEx) model, in which you pay up front for the servers you run on-premises. When you switch to cloud-based development, it is important to understand how the process differs from the traditional model and how it affects the cost of running and owning an application built and deployed in the cloud.

What is Cloud ROI?

Cloud ROI, within the context of cloud economics, assesses the influence of a cloud investment on an organization. ROI, which stands for return on investment, serves as a success indicator for most businesses, indicating that a particular business decision has favorably affected the organization’s financial performance. For instance, transitioning to a public cloud provider reduces capital expenditures but increases monthly expenses. Some key factors when calculating cloud ROI include:

- Productivity

- Multi-tenancy

- Pay as you go

- Provisioning time

- Capital spending reduction

- Access to new market

- Cloud risk management
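ROI itself is a simple ratio: (total benefits − total costs) / total costs, measured over the same period. This minimal sketch sums whatever benefit and cost categories a business case tracks. The category names and figures in the example below are hypothetical and only illustrate the arithmetic:

```python
from typing import Mapping


def cloud_roi(benefits: Mapping[str, float], costs: Mapping[str, float]) -> float:
    """ROI = (total benefits - total costs) / total costs, over the same period."""
    total_benefit = sum(benefits.values())
    total_cost = sum(costs.values())
    if total_cost <= 0:
        raise ValueError("total costs must be positive")
    return (total_benefit - total_cost) / total_cost
```

For example, hypothetical benefits of 400,000 in avoided hardware spending, 150,000 in productivity gains, and 50,000 from faster provisioning, set against 300,000 in cloud subscriptions and 100,000 in migration costs, give (600,000 − 400,000) / 400,000 = 0.5, or a 50% return.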

What Is Cloud TCO And Its Components?

TCO, or the total cost of ownership of cloud computing, varies dramatically depending on a company’s needs and complexity. Typical contributors include the cost of running cloud applications alongside any remaining legacy applications. TCO also depends on whether you develop applications with an in-house team or outsource development, which adds service costs. The table below compares the TCO cost categories companies face when managing varied workload needs on cloud and on-premises setups.

| Cost category | Cloud | On-Premises |
|---|---|---|
| Setting Up | | |
| Recurring Expenses | | |
| Contingency | | |
| Retirement | | |
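The cost categories in the table above can be combined into a simple multi-year TCO comparison. This sketch assumes each category is a single figure and that recurring expenses are charged once per year. The `CostProfile` name and the numbers in the example are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostProfile:
    """One deployment option, broken down by the TCO cost categories."""
    setup: float               # one-time cost of setting up
    recurring_per_year: float  # subscriptions or hardware upkeep, staff, power, etc.
    contingency: float         # reserve for incidents and overruns
    retirement: float          # decommissioning or exit costs

    def tco(self, years: int) -> float:
        """Total cost of ownership over the given number of years."""
        return self.setup + self.recurring_per_year * years + self.contingency + self.retirement
```

With hypothetical figures (cloud: 50,000 setup, 120,000 per year, 20,000 contingency, 10,000 retirement; on-premises: 400,000, 60,000, 40,000, 30,000), the cloud option costs 440,000 over three years versus 650,000 on-premises. Over ten years the ranking flips, which is why the time horizon matters in any TCO comparison.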

Top Cloud Providers And Their Offerings

Although there are many cloud providers on the market, the three that lead the industry are Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Let’s look at each of them in detail, including their data center footprint across the globe and their core offerings.

AWS Cloud-Native Services

Amazon Web Services (AWS), the cloud subsidiary of e-commerce giant Amazon.com, is the largest cloud service provider globally. AWS currently offers more than 200 fully featured services, including compute, storage, and databases.

At present, AWS is actively serving customers with 26 regions and 84 availability zones strategically positioned across the United States, AWS GovCloud (US), the Americas, Europe, Asia Pacific, and the Middle East & Africa.

Successive Digital is an AWS Advanced Consulting Partner, and we specialize in helping businesses leverage AWS’s extensive suite of services to drive innovation and achieve their digital transformation goals.

EKS: Amazon Elastic Kubernetes Service (EKS) is a managed Kubernetes service for running Kubernetes applications on AWS and on-premises.

Lambda: AWS Lambda is an event-driven, serverless compute service that lets you run code without provisioning or managing servers, and it can be triggered by many other AWS services.

RDS: Amazon Relational Database Service (RDS) provides managed relational databases such as MySQL, PostgreSQL, and SQL Server.

Azure Cloud-Native Services

Within Microsoft Corporation, the Intelligent Cloud division houses Azure, the second-largest global provider of cloud services. Microsoft Azure, a flagship offering, provides a unified hybrid cloud experience, enhances developer efficiency, offers robust artificial intelligence (AI) capabilities, and prioritizes security and compliance.

At the moment, Microsoft Azure boasts an extensive network encompassing 60 regions and 116 availability zones that are actively serving customers. These regions and availability zones are distributed throughout the United States, Azure Government (U.S.), Americas, Europe, Asia Pacific, as well as in the Middle East & Africa.

AKS: AKS or Azure Kubernetes Service is a managed Kubernetes service by Microsoft Azure that allows you to deploy production-ready Kubernetes applications without worrying about the underlying infrastructure and management.

Azure Functions: Microsoft’s serverless compute service is named Azure Functions. It allows businesses to run event-driven code without managing infrastructure.

Cosmos DB (Azure Cosmos DB): Cosmos DB is a globally distributed, multi-model database service offered by Microsoft. It is ideal for modern, globally distributed applications.

Google Cloud-Native Services

Google Cloud Platform (GCP), offered by Alphabet Inc.’s Google, is the third-largest global provider of cloud services. It specializes in delivering enterprise-ready cloud solutions. GCP lets developers build, test, and launch applications on its extensive and adaptable infrastructure while drawing on the platform’s strengths in security, data management, analytics, and artificial intelligence (AI).

Presently, Google Cloud operates 34 regions and 103 availability zones, strategically located across the United States, the Americas, Europe, and Asia Pacific.

GKE: Google Kubernetes Engine (GKE) is Google’s managed Kubernetes service. Google describes it as the industry’s first fully managed Kubernetes service, with the full Kubernetes API, four-way autoscaling, release channels, and multi-cluster support.

Cloud Run: Cloud Run is Google’s managed compute platform for running containers directly on top of Google’s scalable infrastructure.

Bigtable: Bigtable is Google’s NoSQL big data database service, built to scale to billions of rows and thousands of columns and to store terabytes or even petabytes of data.

Cloud Native Application Development Use Cases Industry-Wide

Industries across the world are rethinking their enterprise processes with cloud-based resources. According to Statista, re-architecting proprietary solutions for microservices adoption is one of the top objectives among enterprises, followed by testing and deploying applications with automated CI/CD pipelines.

In addition, managing or enabling a hybrid cloud setup, deploying business solutions across different geographies, and using best-of-breed cloud-native tools are other cloud trends being adopted across industries. Let’s explore the top industries worldwide that are moving to and managing cloud-native applications for better availability, digital experiences, scalability, and performance.

Use of Cloud Native Application Development in the Healthcare Industry

Healthcare has historically been slower than other industries to adopt advanced technologies like cloud infrastructure and related data technologies. Although these technologies had the potential to improve internal efficiency and the quality of patient care, heavy regulation kept hospitals and providers from moving off legacy systems and embracing real digital transformation.

When COVID-19 made remote, contact-free care the need of the hour, the healthcare industry’s focus shifted from legacy solutions to digital ones almost overnight. Two prominent solutions emerged at the time: telemedicine and remote patient monitoring. Let’s explore how these two segments have changed for good.

- Telemedicine

Pandemic or no pandemic, wearable technology and advanced AI have made telehealth applications common for patients and providers. Healthcare institutions have invested heavily in telehealth infrastructure and built multiple portals on hybrid and multi-cloud setups to enable remote patient care. Because these services are delivered through mobile devices, telehealth applications offer preventive care, better access, and reduced spread of disease, making telehealth worth pursuing.

- Remote patient monitoring

Healthcare organizations use cloud-native technologies to power medical devices for remote patient monitoring, allowing providers to track patients’ conditions from a distance. Wearable technology and IoT play a crucial role in making the monitoring of core vital signs seamless. By combining edge computing with data transmission to the cloud, cloud-native telehealth apps offer real-time health monitoring, prevention, and customized patient care.

Cloud Native Application Development in Finance

The shift to cloud-native development and infrastructure is reshaping the future of the finance industry. Cloud-native empowers financial organizations to innovate at speed and make a genuine difference in an ever more crowded market. Two significant cloud-native use cases for financial companies are real-time fraud detection and automated trading. Let’s explore these use cases and how the finance industry is leveraging cloud-native solutions for them.

- Real-time fraud detection

Financial fraud is a growing concern, and cloud-native application development can help prevent it by giving organizations real-time fraud detection systems. Because these systems process data at scale, cloud computing platforms provide infrastructure that scales with the volume of data being analyzed for insights. They also offer streaming infrastructure and tools, such as Amazon Kinesis and Google Cloud Pub/Sub, that can efficiently handle real-time transaction data.
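Real fraud detection systems typically combine rules with machine learning models. The sketch below shows only one simple rule-based technique: an amount cap plus a per-card velocity check over a sliding time window, applied to events one at a time as they arrive. A plain Python list stands in for a stream consumer (for example, one reading from Amazon Kinesis or Google Cloud Pub/Sub); the class names, thresholds (three transactions per 60 seconds, a 5,000 amount limit), and sample data are hypothetical.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Transaction:
    card_id: str
    amount: float
    timestamp: float  # seconds since an arbitrary start point


class VelocityChecker:
    """Rule-based checks: an amount cap plus a per-card sliding-window count."""

    def __init__(self, max_txns: int = 3, window_seconds: float = 60.0,
                 max_amount: float = 5_000.0) -> None:
        self.max_txns = max_txns
        self.window_seconds = window_seconds
        self.max_amount = max_amount
        # card_id -> timestamps of that card's transactions still inside the window
        self._recent = defaultdict(deque)

    def check(self, txn: Transaction) -> list[str]:
        """Return the reasons this transaction looks suspicious (empty if none)."""
        reasons = []
        if txn.amount > self.max_amount:
            reasons.append("amount above limit")
        window = self._recent[txn.card_id]
        # Drop timestamps that slid out of the window (events assumed in time order).
        while window and txn.timestamp - window[0] > self.window_seconds:
            window.popleft()
        window.append(txn.timestamp)
        if len(window) > self.max_txns:
            reasons.append("too many transactions in window")
        return reasons


def flag_stream(events: Iterable[Transaction],
                checker: VelocityChecker) -> Iterator[tuple[Transaction, list[str]]]:
    """Consume events one at a time, as a stream consumer would, yielding alerts."""
    for txn in events:
        reasons = checker.check(txn)
        if reasons:
            yield txn, reasons


if __name__ == "__main__":
    sample = [
        Transaction("card-1", 20.0, 0),
        Transaction("card-1", 35.0, 10),
        Transaction("card-1", 15.0, 20),
        Transaction("card-1", 50.0, 30),     # fourth purchase within 60 s
        Transaction("card-2", 9_000.0, 40),  # single large purchase
    ]
    for txn, reasons in flag_stream(sample, VelocityChecker()):
        print(txn.card_id, reasons)
```

Because each check touches only one card’s recent window, logic like this can be partitioned by card ID and scaled out across many stream consumers.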

- Automated trading platforms

Trading companies have used cloud-native resources in multifaceted trading solutions, such as order management systems, execution management systems, and surveillance and audit solutions. Access to the cloud gives them room to experiment and build prototypes. Real-time alerting and audit trails take advantage of cost-effective data storage, and machine learning-based pattern recognition helps identify trading behavior. Cloud-native environments and resources have also been helpful for backtesting, which lets customers develop trading algorithms: customers can use historical data to build an algorithmic trading strategy within a simulated matching environment, with an automated process that sends the model to compliance for review. If approved, the strategy can go straight into production.
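To make the backtesting idea concrete, here is a minimal sketch that replays a historical price series through a moving-average crossover strategy, a common textbook example chosen here for illustration rather than a strategy the text describes. It assumes all-in buys and sells with no fees, slippage, or order-book matching, so it is far simpler than a simulated matching environment, and the compliance review step is out of scope. Function names, window sizes, and prices are hypothetical.

```python
from statistics import mean


def moving_average(prices: list[float], window: int, end: int) -> float:
    """Average of the `window` prices ending at index `end` (inclusive)."""
    return mean(prices[end - window + 1 : end + 1])


def backtest_crossover(prices: list[float], short: int = 3, long: int = 5,
                       cash: float = 1_000.0) -> float:
    """Replay historical prices through a moving-average crossover strategy.

    Buys with all cash when the short average rises above the long average and
    sells everything when it falls below. Ignores fees, slippage, and partial
    fills. Returns the final portfolio value at the last price.
    """
    if short >= long:
        raise ValueError("short window must be smaller than long window")
    if len(prices) < long:
        raise ValueError("need at least `long` prices")
    units = 0.0
    for i in range(long - 1, len(prices)):
        short_ma = moving_average(prices, short, i)
        long_ma = moving_average(prices, long, i)
        price = prices[i]
        if short_ma > long_ma and units == 0:
            units, cash = cash / price, 0.0   # enter the position
        elif short_ma < long_ma and units > 0:
            cash, units = units * price, 0.0  # exit the position
    return cash + units * prices[-1]


if __name__ == "__main__":
    history = [10, 10, 10, 10, 10, 11, 12, 13, 12, 11, 10, 9]  # made-up prices
    print(round(backtest_crossover(history), 2))
```

Running many such replays in parallel over years of data is where elastic cloud compute helps: each parameter combination is an independent job.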

Use Cases of Cloud Native Application Development in the Retail Industry

From innovative customer experiences to scaling to meet demand spikes, the cloud has emerged as an ideal platform for retail transformation. Cloud-native infrastructure enables retailers not only to provide the buying experience today’s shoppers expect but also to create interactive experiences that draw customers in and hold their attention, leading to increased sales and repeat visits. Two crucial scenarios where cloud-native architecture is helping retail and consumer goods companies are:

- Personalized customer experiences

The fundamental forces reshaping retail are fast-changing consumer preferences, increasing competition from small and mobile-first startups, and the always-on, buy-anywhere expectations of today’s customers. Cloud-native retail applications not only let customers shop the way they want but also let businesses analyze massive amounts of customer engagement data to decide on future strategies. It is the cloud that makes AI and ML integration possible within consumer-facing applications, so customers can receive personalized suggestions on every purchase based on their preferences.
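Personalized suggestions can come from many techniques. As a minimal sketch, the code below uses item-to-item co-occurrence counts ("customers who bought X also bought Y"), one of the simplest approaches. The order data and function names are hypothetical, and a production system would more likely use a managed ML or recommendation service over far larger datasets.

```python
from collections import Counter
from itertools import permutations


def build_co_purchase_counts(orders: list[set[str]]) -> dict[str, Counter]:
    """For each product, count how often every other product shares an order with it."""
    co_counts: dict[str, Counter] = {}
    for order in orders:
        for a, b in permutations(order, 2):
            co_counts.setdefault(a, Counter())[b] += 1
    return co_counts


def recommend(cart: set[str], co_counts: dict[str, Counter], k: int = 3) -> list[str]:
    """Suggest up to k products most often bought alongside the items in the cart."""
    scores: Counter = Counter()
    for item in cart:
        scores.update(co_counts.get(item, Counter()))
    for item in cart:
        scores.pop(item, None)  # never suggest something already in the cart
    return [product for product, _ in scores.most_common(k)]


if __name__ == "__main__":
    past_orders = [
        {"tent", "sleeping bag", "lantern"},
        {"tent", "lantern"},
        {"sleeping bag", "pillow"},
        {"tent", "stove"},
    ]
    counts = build_co_purchase_counts(past_orders)
    print(recommend({"tent"}, counts))
```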

- Inventory optimization

The cloud lets companies track their inventory through a web browser on virtually any mobile device or desktop, giving them better access regardless of location. Along with this accessibility, these companies benefit from strong security and save on server maintenance costs.

Cloud Native Application Development for the Manufacturing Industry

Manufacturing is yet another industry that has leveraged cloud-native solutions to bring agility to its operations and accelerate its Industry 4.0 initiatives. The cloud offers the industry vast data storage, processing capabilities, high availability, and performance. Two major areas where cloud computing is helping manufacturing companies are:

- Predictive maintenance

The manufacturing industry is leveraging cloud technologies to implement remote monitoring and maintenance systems, transforming traditional maintenance practices into predictive maintenance. IoT devices and edge computing systems connected to the cloud allow real-time data collection from equipment, sites, and plants, enabling stakeholders to monitor performance, detect issues, and schedule preventive maintenance. This approach helps companies reduce downtime, extend equipment lifespan, and optimize maintenance costs. Because the data is also stored in cloud data centers, manufacturers can apply predictive analytics to anticipate equipment downtime and take proactive measures to keep production uninterrupted.
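A minimal sketch of the detection step, assuming readings from a single sensor (say, vibration) arrive one at a time: a rolling z-score flags any reading that lies far outside the recent norm, which could then trigger a maintenance ticket. The `VibrationMonitor` name, the window size, and the 3-standard-deviation threshold are illustrative assumptions; production predictive maintenance usually relies on trained models across many signals.

```python
from collections import deque
from statistics import mean, pstdev


class VibrationMonitor:
    """Flags sensor readings that deviate sharply from recent history.

    A reading is anomalous when it lies more than `threshold` standard
    deviations from the mean of the previous `window` readings.
    """

    def __init__(self, window: int = 10, threshold: float = 3.0) -> None:
        self.readings = deque(maxlen=window)  # oldest reading drops off automatically
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        """Record a reading; return True if it looks anomalous."""
        anomalous = False
        if len(self.readings) == self.readings.maxlen:
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            # Skip the test on perfectly flat history to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
        self.readings.append(value)
        return anomalous


if __name__ == "__main__":
    monitor = VibrationMonitor(window=5)
    for reading in [1.0, 1.1, 0.9, 1.0, 1.05, 1.02, 5.0]:  # made-up readings
        if monitor.observe(reading):
            print(f"schedule inspection: reading {reading} is out of range")
```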

- Supply chain optimization

Since the supply chain plays a crucial role in manufacturing, cloud technologies help manufacturers streamline supply chain management. Applications developed on the cloud allow manufacturers to track and monitor inventory levels, supplier performance, and logistics in real time. Companies using these applications gain better visibility into their processes and become more efficient at forecasting, inventory management, and order fulfillment. Because cloud-based apps and platforms are available to any number of users from any location, manufacturers also collaborate better with suppliers, distributors, and partners. This helps the industry keep operations running smoothly and minimize supply chain disruptions.
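Forecasting is one place where centrally available supply chain data pays off. As a minimal sketch, simple exponential smoothing produces a one-step-ahead demand forecast from past observations using level_t = alpha × demand_t + (1 − alpha) × level_(t−1), seeded with the first observation. The smoothing factor and sample figures are assumptions, and real planning tools typically use richer models that account for trend and seasonality.

```python
def exponential_smoothing_forecast(demand: list[float], alpha: float = 0.5) -> float:
    """One-step-ahead forecast using simple exponential smoothing.

    Higher alpha weights recent demand more heavily; alpha = 1 simply
    repeats the latest observation.
    """
    if not demand:
        raise ValueError("need at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = float(demand[0])  # seed the level with the first observation
    for observed in demand[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


if __name__ == "__main__":
    weekly_units = [100, 120, 110]  # made-up demand history
    print(exponential_smoothing_forecast(weekly_units))
```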

What Does The Future Look Like For Cloud Native Application Development?

Virtually no industry has left cloud computing and cloud-native development unexplored. While distributed applications, automation, orchestration, and observability have become the de facto way to deliver on the cloud’s promises, innovation continues both within the cloud and through cloud adoption. Two trends are poised to surge in the modern application development and deployment paradigm: the unification of AI and cloud, and cloud-native adoption in edge computing.


Unification of AI and Cloud

The future of cloud-native lies in integration with AI and machine learning. Simply put, businesses will benefit from AI and cloud integration. These two pillars are propelling businesses forward in multiple ways beyond IT.

While the cloud computing market is projected to double from its current size to $947 billion by 2026, the AI market is slated to grow more than fivefold to $309 billion.

Rather than viewing the two as competing markets, enterprises should embrace both and explore how, together, they can drive continued innovation.

The symbiotic connection between AI and cloud computing primarily revolves around automation. AI implementation streamlines routine processes, enhancing efficiency and allowing IT professionals to channel their expertise into more innovative development endeavors.

It’s evident that these technologies influence each other in numerous ways. Investment in the cloud is driving faster adoption of and higher spending on AI, resulting in full-scale AI deployments. In fact, a Deloitte study found that 70% of companies get their AI capabilities through cloud-based software, while 65% create AI applications using cloud services.

Tom Davenport, President’s Distinguished Professor of IT & Management at Babson College, aptly notes, “The cloud has proven to be an excellent distribution channel for algorithms.” All three major cloud providers have made a collection of algorithms accessible, significantly simplifying AI implementation.

The unification of AI and the cloud enables automated processes such as data analysis, data management, security, and decision-making. AI’s capacity for machine learning and large-scale data interpretation enhances efficiency across these processes and offers substantial cost savings for enterprises.

Meanwhile, running AI in the cloud frees developers from building and managing separate infrastructure to host AI platforms. Instead, they can use preconfigured setups and models to test and deploy AI applications.


Edge Computing

Edge computing is another field where we expect to see greater cloud-native adoption in the near future. Because edge computing brings compute and storage to the edge location where data is stored and processed, it enables better analysis and solves problems that arise when data is moved to a centralized location: the sheer volume of data can make such moves cost-prohibitive or technologically impractical, or moving it might violate compliance obligations such as data sovereignty.
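One common way edge sites avoid shipping raw data to a central location is to aggregate it locally and send only compact summaries upstream. The sketch below assumes `(timestamp, value)` sensor readings and fixed 60-second windows; the `WindowSummary` class and `summarize_at_edge` function are hypothetical names, and the upload step itself is omitted.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class WindowSummary:
    window_start: int  # start of the time window, in seconds
    count: int
    minimum: float
    maximum: float
    average: float


def summarize_at_edge(readings: list[tuple[int, float]],
                      window_seconds: int = 60) -> list[WindowSummary]:
    """Collapse raw (timestamp, value) readings into one summary per time window.

    Only these summaries would be sent upstream; the raw readings stay on site.
    """
    buckets: dict[int, list[float]] = {}
    for ts, value in readings:
        start = ts - ts % window_seconds  # align each reading to its window
        buckets.setdefault(start, []).append(value)
    return [
        WindowSummary(start, len(vals), min(vals), max(vals), mean(vals))
        for start, vals in sorted(buckets.items())
    ]


if __name__ == "__main__":
    raw = [(0, 1.0), (30, 3.0), (59, 2.0), (60, 10.0), (125, 4.0)]  # made-up data
    for summary in summarize_at_edge(raw):
        print(summary)
```

With one reading per second, each 60-second window shrinks from 60 values to a single five-field record, which is the kind of reduction that keeps bandwidth costs manageable.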

But how is cloud-native development significant to edge computing, and what are its advantages?

Edge computing architecture is creating a new internet and has become a key way to reduce the time to insight. It lowers latency, handles data even when bandwidth is insufficient, decreases costs, and addresses data sovereignty and compliance.

While cloud computing leverages centralization and economies of scale to meet business needs, edge computing needs a new paradigm. In edge computing, where hardware and software are spread across hundreds or thousands of locations, the only feasible way to manage these distributed systems is to introduce standardization and automation.

Though distributed computing systems are not new to IT, the scale and complexity demanded by edge computing are novel.

How Does Cloud Native Make Edge Computing Feasible?

Although cloud native technologies were born in the cloud, the operating and business paradigms they enable are what make edge computing feasible. Cloud-native brings standardization, such as immutable infrastructure and declarative APIs, combined with robust automation to create manageable systems that require minimal toil.

Edge computing must leverage this standardization and automation to enable business use cases and make them operationally and financially viable for companies. In particular, edge computing can take advantage of the declarative API and built-in reconciliation loops that power Kubernetes, the core of the cloud native ecosystem. These two features make Kubernetes well suited to handling edge computing requirements.

- First, a declarative API offers a uniform interface for managing the hardware and software lifecycle across various infrastructure setups and geographic locations. Instead of reconfiguring computing resources and applications for each specific scenario or location, teams can develop them once and deploy them many times. This approach lets businesses expand their operations globally and serve their customers wherever they are.

- Second, reconciliation loops automate manual processes, establishing a zero-touch environment characterized by self-healing infrastructure and applications. By harnessing Kubernetes to standardize and automate both infrastructure and applications at the edge, organizations can achieve scalability through software rather than human intervention. This shift toward software-driven scalability paves the way for business models previously considered economically unviable.
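The declarative-API and reconciliation-loop pattern can be sketched in a few lines: one desired-state spec is declared once, and a loop repeatedly compares it with each site’s observed state and corrects any drift. This mirrors the idea behind Kubernetes controllers but is not the Kubernetes API; `Site`, `reconcile`, `control_loop`, and the app names are hypothetical, and "scaling" here only updates an in-memory dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    """Hypothetical edge location and the replica counts it is actually running."""
    name: str
    running: dict[str, int] = field(default_factory=dict)  # app -> replicas


def reconcile(desired: dict[str, int], site: Site) -> list[str]:
    """Drive one site's observed state toward the declared desired state.

    Returns the actions taken. Idempotent: once the site matches the spec,
    calling it again does nothing.
    """
    actions = []
    for app, replicas in desired.items():
        current = site.running.get(app, 0)
        if current != replicas:
            actions.append(f"{site.name}: scale {app} {current} -> {replicas}")
            site.running[app] = replicas
    for app in list(site.running):
        if app not in desired:  # anything not declared gets removed
            actions.append(f"{site.name}: remove {app}")
            del site.running[app]
    return actions


def control_loop(desired: dict[str, int], sites: list[Site], passes: int = 1) -> list[str]:
    """Apply the same declared spec to every site; a real controller runs continuously."""
    log: list[str] = []
    for _ in range(passes):
        for site in sites:
            log.extend(reconcile(desired, site))
    return log


if __name__ == "__main__":
    spec = {"pos-app": 2, "telemetry": 1}  # declared once, applied everywhere
    fleet = [Site("store-1"), Site("store-2", {"pos-app": 1, "legacy": 1})]
    for line in control_loop(spec, fleet):
        print(line)
    fleet[0].running["pos-app"] = 0  # simulate a crash at one site
    print(control_loop(spec, fleet))  # the next pass heals it
```

Because `reconcile` is idempotent, running it on a timer is safe: a site already in the desired state produces no actions, while a site whose app crashed or drifted is corrected on the next pass without human intervention.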

By 2024, the edge computing market is expected to be worth over $9.0 billion, with a compound annual growth rate of 30%.

According to the chart above, shared by TechTarget, only 27% of respondents have already implemented edge computing technologies, while 54% find the idea intriguing but are unsure how to achieve the standardization and automation needed to realize its advantages.

Conclusion

As cloud-native architecture and its components increasingly take over companies’ IT and data center requirements, adopting this new paradigm has become pivotal, whether to join the mainstream or to stay competitive in the market. Future innovations like AI algorithms and edge computing will happen on cloud-native solutions, and their evolution will in turn expand cloud-native offerings and capabilities to capture untapped opportunities. It is easy to conclude that now is the right time to embrace cloud native development.