The momentum behind AI adoption shows no signs of easing, but a critical question remains unanswered: are enterprises modernizing their infrastructure for what AI is becoming, or for what it used to be? As hybrid architectures increasingly define the enterprise standard, organizations that fail to scale AI effectively risk falling behind competitors who are already reshaping industries through agent-driven intelligence and large-scale customer experience transformation. The real challenge is no longer experimentation - it is execution. In an economy where AI is expected to unlock unprecedented value, the difference between stalled pilots and scaled innovation will define long-term competitiveness.

These are no longer abstract concerns. Enterprise data and industry benchmarks point to a widening gap between organizations that operationalize AI and those that remain trapped in proof-of-concept cycles. Infrastructure readiness, not model capability, is emerging as the decisive factor.

- Deloitte’s research indicates that 74% of enterprises are already achieving ROI at or above expectations from advanced generative AI initiatives, reinforcing that scalable, production-ready infrastructure has become a baseline requirement rather than a future investment.

- BCG’s 2024 analysis highlights a persistent execution gap, with 74% of organizations struggling to translate AI initiatives into enterprise value and only 26% successfully moving beyond pilot stages - signaling growing urgency for hybrid architectures that integrate MCP-based orchestration with cloud-native platforms such as Amazon Bedrock.

- PwC estimates that AI could contribute up to $15.7 trillion to global GDP by 2030, driven largely by productivity gains that depend on resilient, scalable AI platforms capable of supporting enterprise-wide deployment.

- Forrester’s latest findings show accelerating commitment at the leadership level, with 67% of AI decision-makers planning to increase generative AI investment over the next year, further elevating demand for enterprise-scale deployment platforms like Amazon Bedrock.

Firms in every industry are pushing the boundaries of AI, yet scaling AI workloads remains one of the hardest tasks they face. Traditional MCP server setups are often constrained by limited compute, rigid infrastructure, and high costs, which together become a bottleneck that slows innovation. As AI models and enterprise demands grow, the need to scale MCP servers on AWS into customizable, cost-efficient, and extensible enterprise AI infrastructure has never been greater.

The key lies in combining the performance of MCP servers with the cloud-native scalability of Amazon Bedrock. MCP servers give businesses high-performance control and stability, while Bedrock adds the agility of serverless deployment, enabling faster AI experimentation and large-scale model rollout. Together, they form a cross-functional foundation that helps businesses accelerate AI innovation on AWS and overcome the limitations of traditional configurations.

What Are AWS MCP Servers?

AWS MCP Servers are purpose-built Model Context Protocol (MCP) servers that bring AWS-specific knowledge into AI coding assistants. Unlike language models that rely exclusively on pre-trained data, these servers provide contextual guidance and actionable templates grounded in AWS best practices, architecture patterns, and security guidelines.

Each AWS MCP Server is domain-specific, covering areas such as Infrastructure as Code (IaC) with the AWS CDK, Amazon Bedrock integration, or knowledge management. Used together, they form a holistic environment that helps developers design cloud-native applications efficiently and securely.

Simple Analogy

Imagine building a house. You need:

- Knowledgeable architects

- Structural engineers

- Electricians

- Budget analysts who manage costs

MCP Servers play similar roles for AI coding assistants. They give the assistant the right background knowledge about your code, architecture, costs, and more, so it can make more useful recommendations. Each server can be viewed as a specialized AI assistant focused on a specific area of a software project.

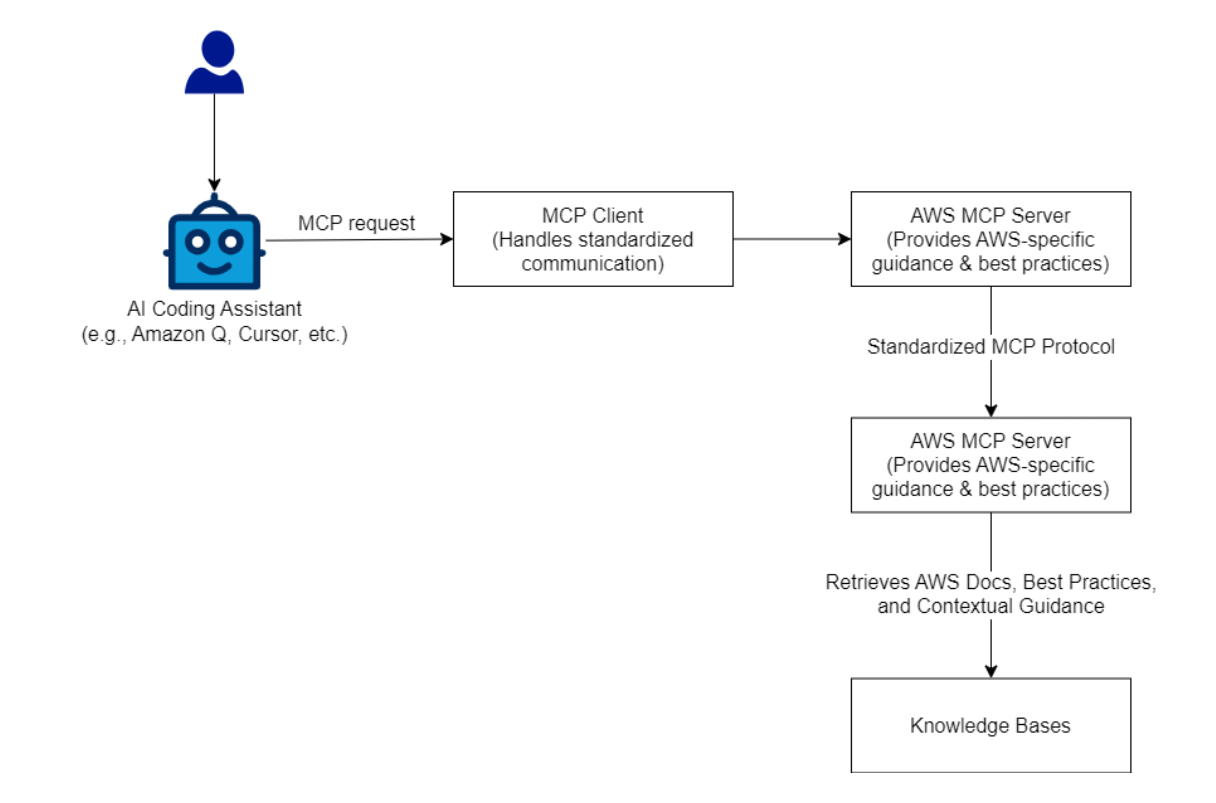

How It Works:

- An AI coding assistant (for example, Amazon Q or Cursor) sends a request through MCP.

- The MCP client acts as an intermediary, ensuring standardized communication.

- The AWS MCP Server processes the request and retrieves relevant AWS documentation, best practices, and context.

- The server draws on knowledge bases to ground its response.

- The response is returned to the AI coding assistant, helping developers incorporate AWS best practices seamlessly.
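The flow above can be sketched in a few lines of Python. MCP messages use JSON-RPC 2.0, and tool invocations use the `tools/call` method with a tool `name` and `arguments`; everything else here is an illustrative assumption. The `search_documentation` tool, the in-memory `KNOWLEDGE_BASE`, and the `ToyDocsServer` and `ToyClient` classes are hypothetical stand-ins, not the actual AWS MCP Server code, and the real transport (stdio or HTTP) and initialization handshake are replaced by direct function calls.

```python
import json

# Hypothetical stand-in for the documentation and best-practice corpus a server draws on.
KNOWLEDGE_BASE = {
    "s3 encryption": "Enable default encryption (SSE-S3 or SSE-KMS) on every bucket.",
    "lambda timeout": "Set explicit timeouts and memory sizes rather than maximums.",
}


class ToyDocsServer:
    """Illustrative MCP-style server exposing one tool over JSON-RPC 2.0."""

    def handle(self, raw_request: str) -> str:
        request = json.loads(raw_request)
        if request.get("method") != "tools/call":
            return self._error(request.get("id"), -32601, "Method not found")
        params = request.get("params", {})
        if params.get("name") != "search_documentation":
            return self._error(request.get("id"), -32602, "Unknown tool")
        query = params.get("arguments", {}).get("query", "").lower()
        # Naive keyword match against the knowledge base (illustrative only).
        hits = [text for key, text in KNOWLEDGE_BASE.items() if key in query]
        result = {"content": [{"type": "text", "text": t} for t in hits]}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})

    @staticmethod
    def _error(request_id, code: int, message: str) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": request_id,
                           "error": {"code": code, "message": message}})


class ToyClient:
    """Plays the MCP client role: frames the assistant's request as JSON-RPC."""

    def __init__(self, server: ToyDocsServer):
        self.server = server
        self.next_id = 1

    def call_tool(self, name: str, arguments: dict) -> dict:
        request = {"jsonrpc": "2.0", "id": self.next_id, "method": "tools/call",
                   "params": {"name": name, "arguments": arguments}}
        self.next_id += 1
        return json.loads(self.server.handle(json.dumps(request)))


if __name__ == "__main__":
    client = ToyClient(ToyDocsServer())
    response = client.call_tool("search_documentation",
                                {"query": "How should I configure S3 encryption?"})
    for item in response["result"]["content"]:
        print(item["text"])
```

Keeping the client as a thin framing layer is what lets any MCP-compatible assistant talk to any compliant server without custom integration code.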

Why AWS MCP Servers Matter

Cloud development today is not just about writing proficient code; it also requires deep knowledge of service configurations, cost-effectiveness, security compliance, and scalable architecture design. Even experienced developers spend significant time researching best practices and navigating complex service integrations.

AWS MCP Servers overcome these problems by:

- Offering live, contextual guidance specific to each AWS service.

- Automating repetitive boilerplate, such as secure defaults and efficient resource settings.

- Building AWS Well-Architected Framework principles, and compliance with them, into the very first line of code.

- Minimizing human error, allowing developers to build faster and more dependable solutions.

Core Capabilities of AWS MCP Servers

AWS MCP Servers offer the following capabilities:

- AI-Driven AWS Expertise - Turns general-purpose LLMs into AWS experts by retrieving guidance dynamically rather than relying on fixed training data.

- Integrated Security and Compliance - Applies best practices for IAM roles, encryption, monitoring, and auditability without manual configuration.

- Cost and Resource Management Optimization - Early-stage insights help avoid over-provisioning and support cost-effective infrastructure design.

- One-Click Access to Standard Patterns and Templates - Provides ready-to-use AWS CDK constructs, Bedrock schema templates, and similar assets, saving the time needed to implement them manually.

- Smooth External Knowledge Retrieval - Uses the open Model Context Protocol to give LLMs safe access to external knowledge without exposing sensitive data.
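As a rough illustration of the security and cost capabilities above, the sketch below applies secure defaults to a proposed storage bucket configuration and flags obvious over-provisioning. The rule set (`SECURE_BUCKET_DEFAULTS`, the 1.5x headroom factor) and the function names are hypothetical assumptions chosen for the example; real guidance would come from AWS documentation and the Well-Architected Framework as surfaced by the servers.

```python
from dataclasses import dataclass, field

# Hypothetical secure defaults for illustration only; "aws:kms" mirrors the
# S3 server-side encryption algorithm value for SSE-KMS.
SECURE_BUCKET_DEFAULTS = {
    "encryption": "aws:kms",
    "block_public_access": True,
    "versioning": True,
}


@dataclass
class Review:
    config: dict
    findings: list = field(default_factory=list)


def apply_bucket_defaults(proposed: dict) -> Review:
    """Fill in missing secure settings and flag explicit insecure overrides."""
    config = dict(proposed)  # copy so the caller's dict is not mutated
    review = Review(config=config)
    for key, secure_value in SECURE_BUCKET_DEFAULTS.items():
        if key not in config:
            config[key] = secure_value
            review.findings.append(f"defaulted {key} -> {secure_value!r}")
        elif config[key] != secure_value:
            review.findings.append(f"WARNING: {key}={config[key]!r} overrides secure default")
    return review


def check_provisioning(requested_vcpus: int, observed_peak_vcpus: float,
                       headroom: float = 1.5) -> list:
    """Flag requests far above observed peak usage (hypothetical headroom rule)."""
    limit = observed_peak_vcpus * headroom
    if requested_vcpus > limit:
        return [f"over-provisioned: {requested_vcpus} vCPUs requested, about {limit:.0f} suggested"]
    return []


if __name__ == "__main__":
    review = apply_bucket_defaults({"name": "app-logs", "versioning": False})
    print(review.config)
    print(review.findings)
    print(check_provisioning(requested_vcpus=64, observed_peak_vcpus=10))
```

Explicit overrides are flagged rather than silently replaced, so a developer keeps control while still seeing where a choice departs from the secure baseline.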

Domain-Specific MCP Servers to Enhance AWS Development

Some domain-specific MCP servers have been released by AWS:

These servers can operate individually or in combination, depending on the nature of the development workflow.

From Static Cloud Automation to Agent-Driven Systems Architecture

Enterprise AI adoption has reached a stage where traditional cloud automation models are no longer enough. As AI workloads shift from single-purpose pipelines to continuously running decision systems, infrastructure itself must become dynamic, state-aware, and context-specific. This is where many organizations' enterprise AI initiatives fail: not because of model quality, but because of an architectural mismatch.

Traditionally, cloud solutions relied on deterministic automation: scripts, Infrastructure-as-Code templates, and CI/CD pipelines. These approaches work well for fixed systems but break down when AI agents and AI assistants must manage infrastructure that changes constantly in response to real-time signals, user behavior, and operational constraints.

This has created momentum toward digital transformation solutions that treat infrastructure as an intelligent system. AWS MCP servers, based on the Model Context Protocol, add the layer that has been missing: a governed, intent-based orchestration plane within the AWS Cloud. For any AWS consulting partner providing digital engineering services, MCP is an essential architectural development, not just another tool.

MCP-Driven Cloud Architecture for Enterprise-Scale AI Systems

Next-generation enterprise AI systems comprise several interacting layers: reasoning models, orchestration logic, data platforms, and execution environments. Without a mediation layer, AI systems either become over-privileged (and therefore risky) or under-utilize infrastructure (and therefore inefficient).

MCP servers address this by acting as a structured contract between intelligence and execution.

In an MCP-enabled architecture, AI agents do not call AWS services directly. Instead, they express structured intent through AWS MCP servers, which evaluate that intent against:

- Contextual state

- Permission boundaries implemented through AWS Identity and Access Management (IAM)

- AWS best practices aligned with operational constraints

- Organizational guardrails defined by platform teams
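A minimal sketch of this evaluation step, assuming intents arrive as structured records. The `Intent` type, the action names, and the in-memory `PERMISSION_BOUNDARIES` and `GUARDRAILS` tables are hypothetical; in a real deployment the permission check would be enforced by IAM policies rather than a Python dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    """Structured intent an agent submits instead of calling AWS APIs directly."""
    principal: str
    action: str   # hypothetical action names, e.g. "scale_inference"
    region: str
    params: dict


# Hypothetical permission boundary per principal (stands in for IAM policies).
PERMISSION_BOUNDARIES = {
    "support-agent": {"read_metrics", "scale_inference"},
    "analytics-agent": {"read_metrics"},
}

# Hypothetical organizational guardrails defined by a platform team.
GUARDRAILS = {
    "allowed_regions": {"us-east-1", "eu-west-1"},
    "max_replicas": 20,
}


def evaluate(intent: Intent, context: dict) -> tuple[bool, str]:
    """Return (allowed, reason) after checking context, permissions, and guardrails."""
    # 1. Contextual state: e.g. a change freeze blocks all mutations.
    if context.get("change_freeze"):
        return False, "denied: change freeze in effect"
    # 2. Permission boundary for the requesting principal.
    if intent.action not in PERMISSION_BOUNDARIES.get(intent.principal, set()):
        return False, f"denied: {intent.principal} may not {intent.action}"
    # 3. Organizational guardrails: allowed regions and a capacity ceiling.
    if intent.region not in GUARDRAILS["allowed_regions"]:
        return False, f"denied: region {intent.region} not allowed"
    replicas = intent.params.get("replicas", 0)
    if replicas > GUARDRAILS["max_replicas"]:
        return False, f"denied: {replicas} replicas exceeds {GUARDRAILS['max_replicas']}"
    return True, "allowed"


if __name__ == "__main__":
    request = Intent("support-agent", "scale_inference", "us-east-1", {"replicas": 8})
    print(evaluate(request, context={"change_freeze": False}))
```

Because the agent only ever submits an `Intent`, platform teams can tighten guardrails without touching agent code.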

This separation reduces coupling between AI logic and cloud implementation, allowing platform teams to evolve cloud solutions independently of model behavior. That decoupling is critical for organizations pursuing digital transformation to scale safely across business units and geographies.

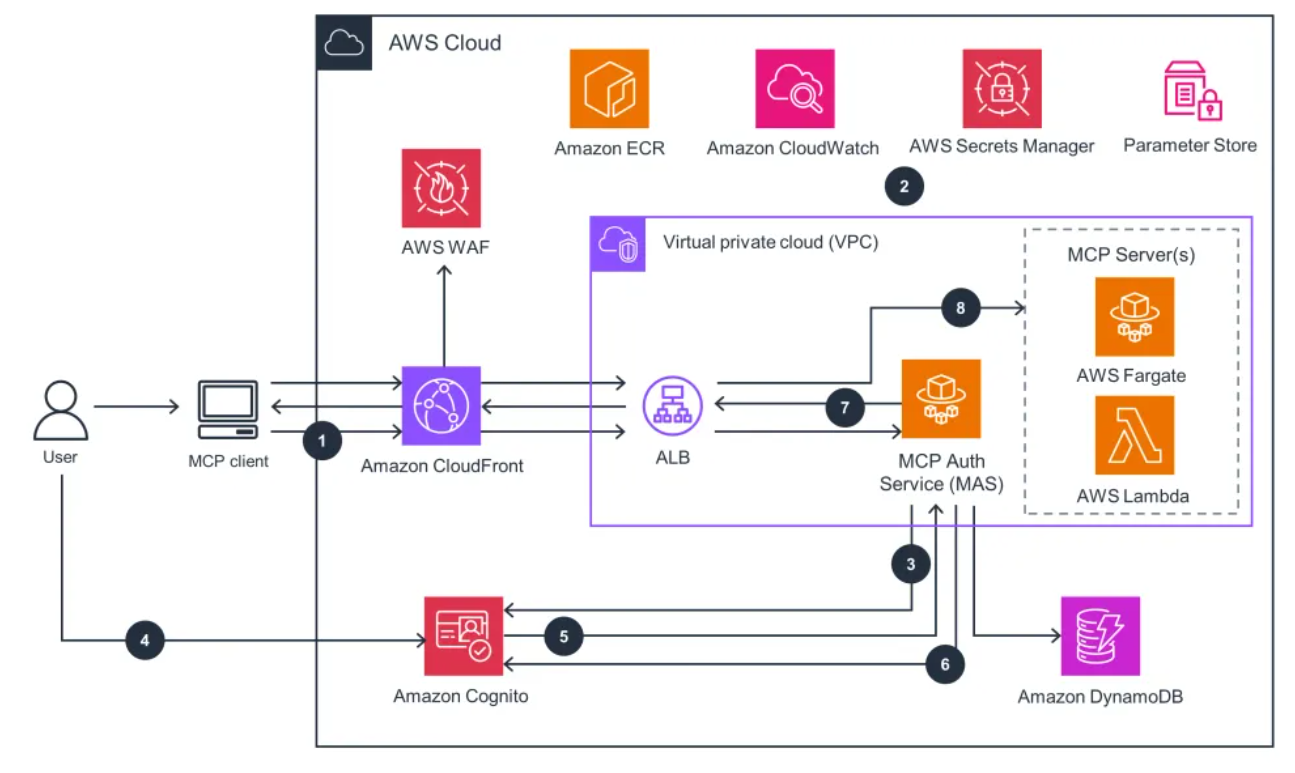

End-to-End Reference Architecture for AI Workloads Powered by AWS MCP

At the top layer, AI assistants and AI agents are reasoning systems: they generate intents, not infrastructure instructions. Those intents are expressed through the Model Context Protocol, which answers the foundational question of what Model Context Protocol is in practical terms: a standard way for AI systems to state what they need from external tools and data.

AWS MCP servers sit directly below this layer as a policy-aware orchestration point. They translate intent into managed execution paths and invoke AWS services only after security, cost, and operational policies have been checked.

Below MCP, the AWS Cloud execution layer consists of compute, data, and integration services such as AWS AppSync, Amazon Cognito, and Amazon DynamoDB, which frequently power real-time analytics systems that feed continuous signals back to AI systems.

Finally, governance is enforced through AWS CloudTrail, centralized audit logging, and IAM policies, ensuring every autonomous action is observable, attributable, and reversible.

Enabling Elastic AI Workloads Across Training, Inference, and Analytics

Distributed Model Training at Scale

Modern model training is no longer a one-off batch job. Enterprise-scale AI training is iterative, continuous, and data-driven, and AWS MCP lets AI agents dynamically request, resize, and cancel training environments without embedding infrastructure logic in model pipelines.

Enforcing AWS best practices through MCP workflows helps organizations avoid some of the most common causes of enterprise AI failure, such as over-provisioning and uncontrolled cost spikes.

High-Throughput, Policy-Aware Inference

Inference systems that power real-time analytics platforms must respond to changing demand within seconds, not hours. AWS MCP servers enable AI assistants to scale inference capacity in context, based on latency SLAs, cost constraints, and regional demand, without compromising IAM and governance standards.
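The scaling decision described above can be sketched as a pure function that an MCP workflow might evaluate before requesting capacity. The formula (throughput-based sizing with headroom, one extra replica on an SLO breach, then a budget cap) and every field in `InferencePolicy` are illustrative assumptions, not an AWS or MCP algorithm; a production system would feed such a decision into the serving platform's own scaling mechanism.

```python
import math
from dataclasses import dataclass


@dataclass
class InferencePolicy:
    latency_slo_ms: float          # target p95 latency
    rps_per_replica: float         # measured sustainable throughput per replica
    cost_per_replica_hour: float   # hypothetical hourly price per replica
    hourly_budget: float           # hypothetical spend ceiling per hour
    min_replicas: int = 1          # availability floor
    headroom: float = 0.2          # spare capacity for bursts (20%)


def desired_replicas(policy: InferencePolicy, current_rps: float, p95_latency_ms: float) -> int:
    """Size capacity for demand, add one replica on an SLO breach, then cap by budget."""
    needed = math.ceil(current_rps * (1 + policy.headroom) / policy.rps_per_replica)
    if p95_latency_ms > policy.latency_slo_ms:
        needed += 1  # latency is breaching the SLO: add capacity beyond the throughput estimate
    affordable = math.floor(policy.hourly_budget / policy.cost_per_replica_hour)
    # The availability floor takes precedence over the budget cap in this sketch.
    return max(policy.min_replicas, min(needed, affordable))


if __name__ == "__main__":
    policy = InferencePolicy(latency_slo_ms=200, rps_per_replica=50,
                             cost_per_replica_hour=1.5, hourly_budget=20)
    print(desired_replicas(policy, current_rps=430, p95_latency_ms=150))
```

Keeping the decision pure (inputs in, replica count out) makes it easy to audit and test independently of whichever service applies the change.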

Event-Driven Intelligence Pipelines

MCP also supports coordinating event-driven AI processes across AWS services, such as AWS AppSync for real-time APIs, Amazon Cognito for identity-aware interactions, and Amazon DynamoDB for low-latency state storage. These patterns are the pillars of large-scale customer experience transformation efforts.

Governance, Auditability, and Enterprise Risk Control

Autonomy without governance is the fastest route to operational failure. The main strength of AWS MCP servers is that they provide governance by design.

Every MCP-mediated action is:

- Authenticated by AWS Identity and Access Management (IAM)

- Logged through AWS CloudTrail

- Captured through centralized audit logging.
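To make the audit trail concrete, here is a sketch of application-level audit logging around an MCP tool. CloudTrail already records the underlying AWS API calls; this complementary record captures agent-level context (which principal asked, with which arguments, and the outcome). The `audited` decorator, the `AUDIT_LOG` list, and the `resize_training_cluster` tool are hypothetical, and a real system would ship entries to a centralized, append-only store rather than keep them in memory.

```python
import functools
import json
from datetime import datetime, timezone

AUDIT_LOG: list[str] = []  # stand-in for a centralized, append-only audit store


def audited(action: str):
    """Record who requested an action, with what arguments, and the outcome."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(principal: str, **kwargs):
            entry = {
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "principal": principal,
                "action": action,
                "arguments": kwargs,
            }
            try:
                result = func(principal, **kwargs)
                entry["outcome"] = "success"
                return result
            except Exception as exc:
                entry["outcome"] = f"error: {exc}"
                raise
            finally:
                # Runs on success and failure alike, so every attempt is recorded.
                AUDIT_LOG.append(json.dumps(entry, sort_keys=True))
        return wrapper
    return decorator


@audited("resize_training_cluster")
def resize_training_cluster(principal: str, *, cluster: str, nodes: int) -> str:
    # Hypothetical tool body; a real tool would call AWS APIs with scoped IAM credentials.
    if nodes < 0:
        raise ValueError("nodes must be non-negative")
    return f"{cluster} resized to {nodes} nodes"


if __name__ == "__main__":
    print(resize_training_cluster("agent-7", cluster="train-a", nodes=4))
    print(AUDIT_LOG[-1])
```

Recording failed attempts as well as successful ones is what turns the log into a chain of custody rather than a list of completed changes.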

This creates a chain of custody for every decision an AI agent makes. For regulated industries, this architecture is what makes digital transformation solutions enterprise-grade.

Organizational Impact Across Engineering and Business Functions

Platform and Cloud Engineering

Platform teams move beyond writing scripts to defining reusable MCP workflows. This standardization lets AWS consulting partner teams deliver consistent results in any environment while accommodating continuous AWS updates without re-architecting systems.

AI and Data Teams

AI teams gain freedom without added risk. AI assistants can reason over infrastructure availability, data freshness, and latency constraints, accelerating experimentation and reducing reliance on centralized operations teams.

Business Leadership

For executives leading customer experience transformation, MCP offers assurance that AI systems can be deployed reliably across the AWS Cloud, backed by certified expertise and governed implementation. This is why organizations are turning to AWS partners with robust AWS certifications and MCP experience.

Cost Governance and Economic Sustainability

Unrestrained autonomy creates runaway spending. MCP facilitates cost-conscious execution by integrating budget thresholds and FinOps rules with orchestration logic.
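One way to express such a rule, sketched under stated assumptions: the cost estimate is simply hourly rate times instance count times hours, and `BudgetPolicy`, its thresholds, and the three decision labels are hypothetical names for illustration, not part of any AWS API.

```python
from dataclasses import dataclass


@dataclass
class BudgetPolicy:
    monthly_budget: float      # hypothetical FinOps budget for this team
    approval_threshold: float  # single requests above this need human sign-off


def gate_request(policy: BudgetPolicy, spent_to_date: float,
                 hourly_rate: float, instances: int, hours: float) -> str:
    """Return 'approve', 'needs_approval', or 'reject' for a proposed workload."""
    estimated = hourly_rate * instances * hours
    if spent_to_date + estimated > policy.monthly_budget:
        return "reject"            # would exceed the budget outright
    if estimated > policy.approval_threshold:
        return "needs_approval"    # affordable, but large enough to require sign-off
    return "approve"


if __name__ == "__main__":
    policy = BudgetPolicy(monthly_budget=10_000, approval_threshold=1_000)
    print(gate_request(policy, spent_to_date=2_000, hourly_rate=4.0, instances=8, hours=10))
```

Placing the check inside the orchestration path, rather than in a monthly report, is what stops spending before it happens instead of explaining it afterwards.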

This is especially important when organizations deploy solutions through AWS Marketplace, where standardization, repeatability, and cost transparency directly influence ROI across cloud solution portfolios.

Adoption Roadmap for Enterprise Leaders

- Visibility Phase - Enable read-only MCP integrations to pull infrastructure context and identify automation candidates.

- Controlled Execution Phase - Implement IAM boundaries and approval gates with SOP-based MCP workflows.

- Scaled Intelligence Phase - Extend MCP to mission-critical AI workloads, with governance and cost control built in.
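The three phases can be encoded as an authorization rule that an MCP workflow consults before each step. The `Phase` enum, the read-only operation verbs, and the choice that only critical changes still require approval in the final phase are assumptions made for this sketch.

```python
from enum import Enum


class Phase(Enum):
    VISIBILITY = 1
    CONTROLLED_EXECUTION = 2
    SCALED_INTELLIGENCE = 3


READ_ONLY_OPERATIONS = {"describe", "list", "get"}  # hypothetical operation verbs


def authorize(phase: Phase, operation: str, approved: bool = False,
              critical: bool = False) -> bool:
    """Decide whether an MCP workflow step may run in the current adoption phase."""
    if operation in READ_ONLY_OPERATIONS:
        return True                  # reads are allowed in every phase
    if phase is Phase.VISIBILITY:
        return False                 # phase 1: no mutations at all
    if phase is Phase.CONTROLLED_EXECUTION:
        return approved              # phase 2: every mutation passes an approval gate
    return approved or not critical  # phase 3: only critical changes still need approval


if __name__ == "__main__":
    for phase in Phase:
        print(phase.name, authorize(phase, "scale"), authorize(phase, "scale", approved=True))
```

Moving between phases then becomes a configuration change rather than a rewrite of the workflows themselves.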

This progressive model has become common practice among established AWS consulting partners.

How Our AWS Services Support MCP-Driven, Scalable AI Architectures

Building AI at enterprise scale is not just about deploying models; it is about designing cloud architectures that can evolve autonomously, remain governed, and scale without breaking cost, security, or operational boundaries. Our AWS services are purpose-built to help organizations operationalize AWS MCP Servers and agent-driven systems with confidence.

Cloud Strategy & AI Architecture Advisory

We help enterprises design MCP-enabled cloud strategies that align AI ambition with real-world constraints. From defining intent-driven orchestration models to embedding AWS Well-Architected principles, we guide platform teams in building future-ready AI foundations that scale responsibly.

AWS Migration for AI-Ready Workloads

Beyond lift-and-shift, we modernize existing environments to support AI training, inference, and analytics at scale. Our migration approach prepares workloads for MCP-based orchestration, ensuring flexibility, cost efficiency, and seamless integration with services such as Amazon Bedrock.

Application Modernization for Agentic Systems

We transform legacy applications into cloud-native, MCP-compatible systems using microservices, containers, serverless architectures, and event-driven patterns. This enables AI agents to interact with infrastructure through governed intent rather than brittle service-level logic.

DevOps, MLOps & Autonomous Automation

We implement CI/CD, Infrastructure as Code (IaC), and MLOps pipelines designed for continuously running AI systems. By integrating MCP workflows into DevOps practices, we enable controlled autonomy—where AI can adapt infrastructure dynamically without compromising governance.

Cloud-Native AI Application Development

Our teams build scalable AI applications leveraging Amazon Bedrock, AWS AppSync, DynamoDB, and real-time analytics platforms. These solutions are designed to work seamlessly with MCP Servers, supporting high-throughput inference, contextual decision-making, and rapid experimentation.

Security, Governance & Cost Control by Design

Security and FinOps are embedded from day one. We enforce IAM boundaries, auditability through AWS CloudTrail, and budget-aware execution across MCP workflows—ensuring every autonomous AI action remains secure, observable, and economically sustainable.

Strategic Outlook: MCP as the Control Plane for Agentic Enterprises

The industry is rapidly moving beyond asking "What is Model Context Protocol?" The real question is how quickly organizations can operationalize it.

Model Context Protocol, implemented through AWS MCP Servers, is emerging as the control plane for autonomous systems on the AWS Cloud. Enterprises that align MCP with digital engineering services, enterprise AI, and long-term digital transformation solutions will define the next generation of cloud-native intelligence.

Ready to Scale Your AI-Driven Cloud Strategy with Confidence?

Successive Digital, an Advanced AWS Consulting Partner and trusted expert in digital transformation solutions, can help you architect, implement, and optimize AWS MCP Servers for mission-critical AI workloads on the AWS Cloud.

From strategy and design to execution and governance, our team drives customer experience transformation and builds future-ready platforms that deliver measurable business impact.

Contact us to explore how you can accelerate your MCP adoption roadmap and unlock sustainable growth.

How do AWS MCP Servers help enterprises scale AI workloads on AWS?

AWS MCP Servers act as a governed orchestration layer between AI agents and AWS services, enabling secure, policy-aware scaling of training, inference, and analytics workloads. They allow enterprises to scale AI dynamically on the AWS Cloud while maintaining control, auditability, and alignment with AWS best practices.

What is Model Context Protocol, and why is it critical for enterprise AI?

The Model Context Protocol standardizes how AI assistants and AI agents interact with external systems. In enterprise environments, it enables structured, auditable access to infrastructure and data, reducing operational risk and helping organizations move beyond pilots to production-scale AI.

How do AWS MCP Servers support security, governance, and compliance?

AWS MCP Servers enforce governance by integrating with AWS Identity and Access Management (IAM) and capturing all actions through AWS CloudTrail and centralized audit logging. This ensures every AI-driven operation is secure, traceable, and compliant with enterprise and regulatory requirements.

Can AWS MCP Servers work with existing AWS services and platforms?

Yes. AWS MCP Servers integrate seamlessly with core AWS services such as AWS AppSync, Amazon Cognito, DynamoDB, and real-time analytics platforms. This allows enterprises to adopt MCP without redesigning their existing cloud architecture.

Who should consider adopting AWS MCP Servers, and when?

Organizations investing in enterprise AI, digital transformation solutions, or customer experience transformation should consider MCP early—especially those struggling to scale AI beyond pilots. Adoption is most effective during cloud modernization or AI platform standardization initiatives.
